Preview the new Azure Storage SDK for Go & Storage SDKs roadmap

We are excited to announce the redesigned Azure Storage SDK for Go, with documentation and examples available today. The new SDK follows the next-generation design philosophy for Azure Storage SDKs and is built on top of code generated by AutoRest, an open-source code generator for the OpenAPI specification.

Azure Storage Client Libraries have evolved dramatically in the past few years, supporting many development languages from C++ to JavaScript. As we strive to support all platforms and programming languages, we have decided to use AutoRest to accelerate delivering new features in more languages. In the Storage SDK for Go, AutoRest produces what we call the protocol layer. The new SDK uses this internally to communicate with the Storage service.

Currently, the new Azure Storage SDK for Go supports only Blob storage, but we will be releasing Files and Queue support in the future. Note that all these services will be packaged separately, something we started doing recently with the Azure Storage SDK for Python. Expect to see split packages for all the Storage Client Libraries in the future. This reduces the library footprint dramatically if you only use one of the Storage services.

What's new?

- Layered SDK architecture: Low-level and high-level APIs

- There is one SDK package per service (REST API) version for greater flexibility. You can choose to use an older REST API version and avoid breaking your application. In addition, you can load multiple packages side by side.

- Built on code generated by AutoRest

- Split packages for Blob, Files and Queue services for reduced footprint

- A new extensible middleware pipeline to mutate HTTP requests and responses

- Progress notification for upload and download operations
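To make the idea of a middleware pipeline concrete, here is a minimal sketch built only on Go's standard `net/http` package. It is not the SDK's pipeline implementation; the names `policy`, `chain`, `addHeader`, `fakeTransport`, and `send` are hypothetical, and the transport is a stand-in that never touches the network.

```go
package main

import (
	"fmt"
	"io"
	"net/http"
	"strings"
)

// roundTripFunc adapts a plain function to the http.RoundTripper interface.
type roundTripFunc func(*http.Request) (*http.Response, error)

func (f roundTripFunc) RoundTrip(r *http.Request) (*http.Response, error) { return f(r) }

// policy (hypothetical name) wraps a RoundTripper with extra behavior.
type policy func(next http.RoundTripper) http.RoundTripper

// chain wraps transport so that the first policy listed sees the request first.
func chain(transport http.RoundTripper, policies ...policy) http.RoundTripper {
	for i := len(policies) - 1; i >= 0; i-- {
		transport = policies[i](transport)
	}
	return transport
}

// addHeader returns a policy that sets one header on every outgoing request.
func addHeader(key, value string) policy {
	return func(next http.RoundTripper) http.RoundTripper {
		return roundTripFunc(func(r *http.Request) (*http.Response, error) {
			r = r.Clone(r.Context()) // do not mutate the caller's request
			r.Header.Set(key, value)
			return next.RoundTrip(r)
		})
	}
}

// fakeTransport stands in for the network: it echoes one request header
// back as the response body.
func fakeTransport(header string) http.RoundTripper {
	return roundTripFunc(func(r *http.Request) (*http.Response, error) {
		return &http.Response{
			StatusCode: http.StatusOK,
			Header:     make(http.Header),
			Body:       io.NopCloser(strings.NewReader(r.Header.Get(header))),
			Request:    r,
		}, nil
	})
}

// send issues one GET through rt and returns the response body as a string.
func send(rt http.RoundTripper, url string) (string, error) {
	req, err := http.NewRequest(http.MethodGet, url, nil)
	if err != nil {
		return "", err
	}
	resp, err := rt.RoundTrip(req)
	if err != nil {
		return "", err
	}
	defer resp.Body.Close()
	body, err := io.ReadAll(resp.Body)
	return string(body), err
}

func main() {
	rt := chain(fakeTransport("X-Request-Tag"), addHeader("X-Request-Tag", "demo"))
	body, err := send(rt, "https://example.invalid/")
	if err != nil {
		fmt.Println("request failed:", err)
		return
	}
	fmt.Println("transport saw header value:", body)
}
```

Each policy only knows about the next link in the chain, so concerns such as logging, retries, or header injection can be added or removed without touching the others.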

Layered SDK architecture

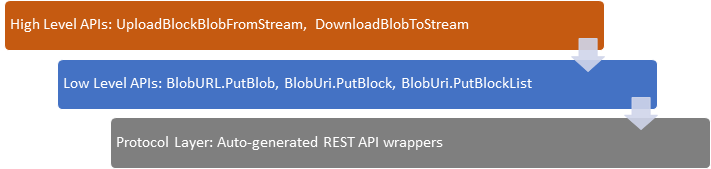

The new Storage SDK for Go consists of three layers that simplify the programming experience and make debugging easier. The first layer is the auto-generated layer, which consists of private types and functions. You will be able to generate this layer using AutoRest in the future. Stay tuned!

The second layer is a stateless, thin wrapper that maps one-to-one to the Azure Storage REST API operations. As an example, a BlobURL object offers methods like PutBlob, PutBlock, and PutBlockList. Calling one of these APIs results in a single REST API request, which is retried a number of times if the first call fails.
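The retry behavior mentioned here can be pictured with a small, generic sketch. This is not the SDK's retry policy: `withRetry`, `flaky`, and `demo` are hypothetical names, the linear back-off is an arbitrary choice, and a real policy would retry only failures it considers transient.

```go
package main

import (
	"context"
	"errors"
	"fmt"
	"time"
)

// withRetry calls op up to maxTries times and returns the number of attempts
// made. Between failed attempts it waits delay*attempt, or stops early if the
// context is cancelled.
func withRetry(ctx context.Context, maxTries int, delay time.Duration,
	op func(context.Context) error) (int, error) {
	if maxTries < 1 {
		maxTries = 1
	}
	var err error
	for try := 1; try <= maxTries; try++ {
		if err = op(ctx); err == nil {
			return try, nil
		}
		if try == maxTries {
			break
		}
		select {
		case <-ctx.Done():
			return try, ctx.Err()
		case <-time.After(delay * time.Duration(try)):
		}
	}
	return maxTries, err
}

// flaky returns an operation that fails the first `failures` times it is called.
func flaky(failures int) func(context.Context) error {
	calls := 0
	return func(context.Context) error {
		calls++
		if calls <= failures {
			return errors.New("simulated transient failure")
		}
		return nil
	}
}

// demo runs a flaky operation under withRetry with a short delay.
func demo(failures, maxTries int) (int, error) {
	return withRetry(context.Background(), maxTries, time.Millisecond, flaky(failures))
}

func main() {
	tries, err := demo(2, 4)
	fmt.Printf("attempts=%d err=%v\n", tries, err)
}
```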

The third layer consists of high-level abstractions for your convenience. One example is UploadBlockBlobFromStream, which calls a number of PutBlock operations depending on the size of the stream being uploaded.
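As a rough illustration of how a high-level helper can turn one stream into several block uploads, the sketch below splits a reader into fixed-size chunks and hands each one to a callback. It does not call the SDK: `uploadInBlocks`, `blockID`, and `splitText` are hypothetical names, and the two callbacks stand in for PutBlock and PutBlockList. The block IDs are base64-encoded and of equal length, which the Blob service requires.

```go
package main

import (
	"encoding/base64"
	"encoding/binary"
	"errors"
	"fmt"
	"io"
	"strings"
)

// blockID builds a fixed-length, base64-encoded ID from the block's index.
func blockID(index uint32) string {
	var raw [4]byte
	binary.BigEndian.PutUint32(raw[:], index)
	return base64.StdEncoding.EncodeToString(raw[:])
}

// uploadInBlocks reads src in chunks of at most blockSize bytes, passes each
// chunk to putBlock, and finally passes the ordered IDs to putBlockList.
// The data slice is reused between calls, so putBlock must not retain it.
func uploadInBlocks(src io.Reader, blockSize int,
	putBlock func(id string, data []byte) error,
	putBlockList func(ids []string) error) (int, error) {
	if blockSize <= 0 {
		return 0, errors.New("blockSize must be positive")
	}
	var ids []string
	buf := make([]byte, blockSize)
	for {
		n, err := io.ReadFull(src, buf)
		if n > 0 {
			id := blockID(uint32(len(ids)))
			if perr := putBlock(id, buf[:n]); perr != nil {
				return len(ids), perr
			}
			ids = append(ids, id)
		}
		if err == io.EOF || err == io.ErrUnexpectedEOF {
			break // end of stream; the last chunk may be short
		}
		if err != nil {
			return len(ids), err
		}
	}
	return len(ids), putBlockList(ids)
}

// splitText is a demo helper: it "uploads" text into an in-memory buffer and
// returns the number of blocks and the reassembled content.
func splitText(text string, blockSize int) (int, string, error) {
	var out strings.Builder
	blocks, err := uploadInBlocks(strings.NewReader(text), blockSize,
		func(id string, data []byte) error { out.Write(data); return nil },
		func(ids []string) error { return nil })
	return blocks, out.String(), err
}

func main() {
	blocks, text, err := splitText("Hello World!", 5)
	if err != nil {
		fmt.Println("upload failed:", err)
		return
	}
	fmt.Printf("uploaded %q in %d blocks\n", text, blocks)
}
```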

Hello World

The following is a Hello World example using the new Storage SDK for Go. Check out the full example on GitHub.

// This snippet uses the standard context, fmt, log, net/url, os, and strings
// packages, plus the SDK's azblob package.

// From the Azure portal, get your Storage account's name and key and set environment variables.
accountName, accountKey := os.Getenv("ACCOUNT_NAME"), os.Getenv("ACCOUNT_KEY")

// Use your Storage account's name and key to create a credential object.
credential := azblob.NewSharedKeyCredential(accountName, accountKey)

// Create a request pipeline that is used to process HTTP(S) requests and responses.
p := azblob.NewPipeline(credential, azblob.PipelineOptions{})

// Create a ServiceURL object that wraps the service URL and a request pipeline.
u, _ := url.Parse(fmt.Sprintf("https://%s.blob.core.windows.net", accountName))
serviceURL := azblob.NewServiceURL(*u, p)

// All HTTP operations allow you to specify a Go context.Context object to
// control cancellation/timeout.
ctx := context.Background() // This example uses a never-expiring context.

// Let's create a container
fmt.Println("Creating a container named 'mycontainer'")
containerURL := serviceURL.NewContainerURL("mycontainer")
_, err := containerURL.Create(ctx, azblob.Metadata{}, azblob.PublicAccessNone)
if err != nil { // An error occurred
	if serr, ok := err.(azblob.StorageError); ok { // This error is service-specific
		switch serr.ServiceCode() { // Compare the service code to the ServiceCodeXxx constants
		case azblob.ServiceCodeContainerAlreadyExists:
			fmt.Println("Received 409. Container already exists")
		default:
			// Handle other service errors ...
			log.Fatal(err)
		}
	} else {
		// Not a service error (for example, a network failure)
		log.Fatal(err)
	}
}

// Create a URL that references a to-be-created blob in your
// Azure Storage account's container.
blobURL := containerURL.NewBlockBlobURL("HelloWorld.txt")

// Create the blob with string (plain text) content.
data := "Hello World!"
putResponse, err := blobURL.PutBlob(ctx, strings.NewReader(data),
	azblob.BlobHTTPHeaders{ContentType: "text/plain"}, azblob.Metadata{},
	azblob.BlobAccessConditions{})
if err != nil {
	log.Fatal(err)
}
fmt.Println("Etag is " + putResponse.ETag())
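The Hello World example passes a never-expiring `context.Background()` to every call. As a sketch of how a deadline could be attached instead, the snippet below uses only the standard `context` package; `slowCall` is a hypothetical stand-in for any SDK operation that accepts a context, and `callWithTimeout` is likewise an illustrative helper.

```go
package main

import (
	"context"
	"errors"
	"fmt"
	"time"
)

// slowCall (hypothetical) stands in for an operation that accepts a context.
// It finishes after `work`, or returns early if the context is done.
func slowCall(ctx context.Context, work time.Duration) error {
	select {
	case <-time.After(work):
		return nil
	case <-ctx.Done():
		return ctx.Err()
	}
}

// callWithTimeout runs slowCall under a deadline and reports whether the
// deadline was exceeded.
func callWithTimeout(timeoutMs, workMs int) (bool, error) {
	ctx, cancel := context.WithTimeout(context.Background(),
		time.Duration(timeoutMs)*time.Millisecond)
	defer cancel() // always release the timer, even on success

	err := slowCall(ctx, time.Duration(workMs)*time.Millisecond)
	return errors.Is(err, context.DeadlineExceeded), err
}

func main() {
	timedOut, err := callWithTimeout(20, 2000)
	fmt.Println("timed out:", timedOut, "error:", err)
}
```

The same `ctx` value could be passed to several calls in a row, in which case the deadline covers all of them together.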

Progress reporting with the new Pipeline package

One of the most frequently requested features for all Storage Client Libraries has been the ability to track transfer progress in bytes. This is now available in the Storage SDK for Go. Here is an example:

// This snippet uses the standard bytes, context, fmt, log, net/url, os, and
// strings packages, plus the SDK's azblob and pipeline packages.

// From the Azure portal, get your Storage account's name and key and set environment variables.
accountName, accountKey := os.Getenv("ACCOUNT_NAME"), os.Getenv("ACCOUNT_KEY")

// Create a request pipeline using your Storage account's name and account key.
credential := azblob.NewSharedKeyCredential(accountName, accountKey)
p := azblob.NewPipeline(credential, azblob.PipelineOptions{})

// Build the container URL from your Storage account's blob service endpoint.
cURL, _ := url.Parse(
	fmt.Sprintf("https://%s.blob.core.windows.net/mycontainer", accountName))

// Create a ContainerURL object that wraps the container URL and a request
// pipeline to make requests.
containerURL := azblob.NewContainerURL(*cURL, p)

ctx := context.Background() // This example uses a never-expiring context

// Here's how to create a blob with HTTP headers and progress reporting:
blobURL := containerURL.NewBlockBlobURL("Data.bin")

// requestBody is the stream of data to write
requestBody := strings.NewReader("Some text to write")

// Wrap the request body in a RequestBodyProgress and pass a callback function
// for progress reporting.
_, err := blobURL.PutBlob(ctx,
	pipeline.NewRequestBodyProgress(requestBody,
		func(bytesTransferred int64) {
			// Size reports the total length; Len would report only the unread remainder.
			fmt.Printf("Wrote %d of %d bytes.\n", bytesTransferred, requestBody.Size())
		}),
	azblob.BlobHTTPHeaders{
		ContentType:        "text/html; charset=utf-8",
		ContentDisposition: "attachment",
	}, azblob.Metadata{}, azblob.BlobAccessConditions{})
if err != nil {
	log.Fatal(err)
}

// Here's how to read the blob's data with progress reporting:
get, err := blobURL.GetBlob(ctx, azblob.BlobRange{}, azblob.BlobAccessConditions{}, false)
if err != nil {
	log.Fatal(err)
}

// Wrap the response body in a ResponseBodyProgress and pass a callback function
// for progress reporting.
responseBody := pipeline.NewResponseBodyProgress(get.Body(),
	func(bytesTransferred int64) {
		fmt.Printf("Read %d of %d bytes.\n", bytesTransferred, get.ContentLength())
	})
downloadedData := &bytes.Buffer{}
if _, err := downloadedData.ReadFrom(responseBody); err != nil {
	log.Fatal(err)
}
// The downloaded blob data is in downloadedData's buffer
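For readers curious how a progress wrapper of this kind can work, here is a minimal sketch using only the standard `io` package. It is an illustration of the general technique, not the pipeline package's implementation; `progressReader`, `newProgressReader`, and `copyWithProgress` are hypothetical names.

```go
package main

import (
	"fmt"
	"io"
	"strings"
)

// progressReader wraps an io.Reader and reports the cumulative number of
// bytes read after every successful Read.
type progressReader struct {
	r      io.Reader
	total  int64
	report func(bytesTransferred int64)
}

func (p *progressReader) Read(b []byte) (int, error) {
	n, err := p.r.Read(b)
	if n > 0 {
		p.total += int64(n)
		p.report(p.total)
	}
	return n, err
}

// newProgressReader returns r wrapped so that report is called as data flows.
func newProgressReader(r io.Reader, report func(int64)) io.Reader {
	return &progressReader{r: r, report: report}
}

// copyWithProgress reads text through a progress reader in chunks of
// chunkSize bytes and returns every reported total plus the copied content.
func copyWithProgress(text string, chunkSize int) ([]int64, string, error) {
	if chunkSize < 1 {
		chunkSize = 1
	}
	var seen []int64
	src := newProgressReader(strings.NewReader(text), func(n int64) {
		seen = append(seen, n)
	})
	var out strings.Builder
	buf := make([]byte, chunkSize)
	for {
		n, err := src.Read(buf)
		out.Write(buf[:n])
		if err == io.EOF {
			break
		}
		if err != nil {
			return seen, out.String(), err
		}
	}
	return seen, out.String(), nil
}

func main() {
	text := "Some text to write"
	seen, _, err := copyWithProgress(text, 8)
	if err != nil {
		fmt.Println("copy failed:", err)
		return
	}
	for _, n := range seen {
		fmt.Printf("Read %d of %d bytes.\n", n, len(text))
	}
}
```

Because the wrapper is itself an `io.Reader`, it can be dropped in anywhere a request or response body is consumed without the consumer knowing about it.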

What's next?

Storage SDK for Go roadmap:

- General availability

- Files and Queue packages

- New Storage features like archive and blob tiers coming soon

- Convenience features like parallel file transfer for higher throughput

Roadmap for the rest of the Storage SDKs:

- Split packages for Blob, Files, and Queue services coming soon for .NET and Java, followed by all other Storage Client Libraries

- OpenAPI (a.k.a. Swagger) specifications for AutoRest

- Completely new asynchronous Java Client Library using the Reactive programming model

Developer survey & feedback

Please let us know how we’re doing by taking the 5-question survey. We are actively working on the features you’ve requested, and the annual survey is one of the easiest ways for you to influence our roadmap!

Sercan Guler and Jeffrey Richter

Source: Azure Blog Feed