HDInsight Tools for Visual Studio Code: simplifying cluster and Spark job configuration management

We are happy to announce that HDInsight Tools for Visual Studio Code (VS Code) now use VS Code's built-in user settings and workspace settings to manage HDInsight clusters and Spark job submissions. With this feature, you can manage your linked clusters and set your preferred Azure environment in VS Code user settings. You can also set your default cluster and manage your job submission configurations in VS Code workspace settings.

HDInsight Tools for VS Code can access HDInsight clusters in Azure regions worldwide. For more flexible access, you can reach clusters through your Azure subscriptions, link to an HDInsight cluster using its Ambari username and password, or connect to an HDInsight Enterprise Security Package (ESP) cluster using the domain name and password. All Azure settings and linked HDInsight clusters are saved in VS Code user settings for future use. Spark job submission supports up to a hundred parameters, so you can make full use of your cluster's computing resources and choose the right parameters to optimize Spark job performance. Because these parameters are stored in VS Code workspace settings, you can specify them in JSON format.
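To make the JSON-format parameters more concrete, here is a minimal Python sketch that reads Livy parameters from a settings.json-style string and turns them into the request body for Apache Livy's `POST /batches` endpoint, the Livy configuration layer this post refers to. The allowed field names come from the Livy batch REST API. The setting key `hdinsightJobSubmission.livyConf`, the function `build_livy_batch`, and the example script path are hypothetical; the extension's real setting names and submission code may differ. The sketch also assumes plain JSON, without the comments that VS Code settings files allow.

```python
import json

# Fields accepted in the body of Apache Livy's POST /batches request.
LIVY_BATCH_FIELDS = {
    "file", "proxyUser", "className", "args", "jars", "pyFiles", "files",
    "driverMemory", "driverCores", "executorMemory", "executorCores",
    "numExecutors", "archives", "queue", "name", "conf",
}

# Hypothetical workspace setting key, used only for illustration.
LIVY_SETTINGS_KEY = "hdinsightJobSubmission.livyConf"


def build_livy_batch(settings_json: str, script_path: str) -> dict:
    """Build a Livy batch request body from settings.json-style JSON."""
    settings = json.loads(settings_json)
    livy_conf = settings.get(LIVY_SETTINGS_KEY, {})
    # Reject keys that Livy's batch API would not understand.
    unknown = set(livy_conf) - LIVY_BATCH_FIELDS
    if unknown:
        raise ValueError(f"unsupported Livy parameters: {sorted(unknown)}")
    body = dict(livy_conf)
    body["file"] = script_path  # the script being submitted always wins
    return body


# Usage: a workspace settings fragment with a few Spark tuning parameters.
workspace_settings = """
{
  "hdinsightJobSubmission.livyConf": {
    "driverMemory": "4g",
    "executorMemory": "8g",
    "executorCores": 4,
    "numExecutors": 10,
    "conf": {"spark.sql.shuffle.partitions": "200"}
  }
}
"""
print(json.dumps(build_livy_batch(workspace_settings, "/jobs/wordcount.py"), indent=2))
```

Validating against a fixed field list catches typos in parameter names before a job reaches the cluster, which matters more as the number of configured parameters grows.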

Summary of new features

- Use VS Code user settings to manage your clusters and environments.
  - Set Azure Environment: Run the command HDInsight: Set Azure Environment. The selected Azure environment becomes your default for cluster navigation, data queries, and job submissions.
  - Link a Cluster: Run the command HDInsight: Link a Cluster. The linked cluster information is saved in user settings.

- Use VS Code workspace settings to manage your PySpark job submissions.
  - Set Default Cluster: Run the command HDInsight: Set Default Cluster. The selected cluster becomes your default for PySpark or Hive data queries and job submissions.
  - Set Configurations: Run the command HDInsight: Set Configurations to specify parameter values for your Spark job's Livy configuration.

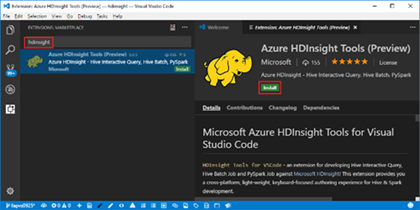
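The split above relies on how VS Code resolves settings: a value set in workspace settings takes precedence over the same setting in user settings. The following Python sketch models that precedence under a simplifying assumption based on VS Code's settings-precedence documentation as we understand it: primitive and list values from the workspace replace user values, while object values are merged. The keys `hdinsight.azureEnvironment` and `hdinsight.defaultCluster`, the cluster names, and the helper functions are hypothetical placeholders, not the extension's confirmed setting names.

```python
import json
from typing import Optional

# Hypothetical setting keys, used only for illustration.
AZURE_ENV_KEY = "hdinsight.azureEnvironment"
DEFAULT_CLUSTER_KEY = "hdinsight.defaultCluster"


def merge_settings(user: dict, workspace: dict) -> dict:
    """Overlay workspace settings on user settings without mutating either.

    Primitive and list values from the workspace replace user values;
    dict (object) values are merged recursively.
    """
    merged = dict(user)
    for key, value in workspace.items():
        if isinstance(value, dict) and isinstance(merged.get(key), dict):
            merged[key] = merge_settings(merged[key], value)
        else:
            merged[key] = value
    return merged


def resolve(user_json: str, workspace_json: str) -> tuple[Optional[str], Optional[str]]:
    """Return (azure_environment, default_cluster) after applying precedence."""
    settings = merge_settings(json.loads(user_json), json.loads(workspace_json))
    return settings.get(AZURE_ENV_KEY), settings.get(DEFAULT_CLUSTER_KEY)


# Usage: the Azure environment comes from user settings, while the
# workspace chooses its own default cluster.
user = '{"hdinsight.azureEnvironment": "Azure", "hdinsight.defaultCluster": "dev-cluster"}'
workspace = '{"hdinsight.defaultCluster": "prod-spark"}'
print(resolve(user, workspace))  # ('Azure', 'prod-spark')
```

Keeping cluster links and the Azure environment at user scope means they follow you across projects, while each workspace can pin its own default cluster and job configuration.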

How to install or update

First, install Visual Studio Code and download Mono 4.2.x (for Linux and macOS). Then get the latest HDInsight Tools from the VS Code extension repository or the VS Code Marketplace by searching for HDInsight Tools for VSCode.

For more information about HDInsight Tools for VSCode, please use the following resources:

- User Manual: HDInsight Tools for VSCode

- User Manual: Set Up PySpark Interactive Environment

- Demo Video: HDInsight for VSCode Video

- Hive LLAP: Use Interactive Query with HDInsight

Learn more about today’s announcements on the Big Data blog. Discover more on the Azure service updates page.

If you have questions, feedback, comments, or bug reports, please use the comments below or send a note to hdivstool@microsoft.com.

Source: Azure Blog Feed