Performance updates and tuning best practices for using Azure Database for PostgreSQL

In March 2018 Microsoft announced general availability of Azure Database for PostgreSQL, a simple and fully managed database service for PostgreSQL that removes the complexities around infrastructure management, data availability, protection, and scale. The service continues to see tremendous growth, and in the months since Amit Banerjee's blog posting Performance best practices for using Azure Database for PostgreSQL we have added even more performance improvements to the service. In addition, through hands-on experience with customers' workloads we have determined several best practices for tuning workloads. This post describes service performance improvements and performance tuning best practices to consider based on the characteristics of your workload. We recommend testing all these best practices because of the potential tradeoffs associated with each one.

Service improvements

The recent service improvements relate to storage and CPU optimizations resulting in faster IO latency and CPU efficiency. I/O intensive workloads and read heavy workloads will experience the most benefit from these improvements.

Tuning best practices

There are many ways to optimize your PostgreSQL database and applications for better performance. In this post, we share ideas around optimizing:

- Autovacuum

- Bulk inserts

- Client applications

Optimizing autovacuum

PostgreSQL uses MVCC to allow greater database concurrency. Every update results in an insert and delete, and every delete results in the row(s) being soft-marked for deletion. This results in dead tuples that have to be purged later. PostgreSQL achieves this by running a vacuum job.

The vacuum job can be triggered manually or automatically. There will be more dead tuples when the database is experiencing heavy update or delete operations and fewer when idle, making the need for running vacuum more frequently under load. This makes running vacuum jobs manually inconvenient.

Autovacuum on the other hand, can be configured and benefits from tuning. The default values that PostgreSQL ships with try to ensure the product works on all kinds of devices including Raspberry Pis, and the ideal configuration values depend on a number of factors:

- Total resources available – SKU and storage size.

- Resource usage.

- Individual object characteristics.

Why run autovacuum?

If you don't run vacuum from time to time, the dead tuples that accumulate can result in:

- Data bloat – larger databases and tables.

- Larger sub-optimal indexes.

- Increased I/O.

Monitoring bloat/vacuum (queries)

The following sample query is designed to identify the number of dead and live tuples in a table named "foo":

SELECT relname, n_dead_tup, n_live_tup, (n_dead_tup/ n_live_tup) AS DeadTuplesRatio, last_vacuum, last_autovacuum FROM pg_catalog.pg_stat_all_tables WHERE relname = 'foo' order by n_dead_tup DESC;

Autovacuum configurations

The configuration parameters that control autovacuum revolve around two key questions:

- When should it start?

- How much should it clean after it starts?

Below are some of the autovacuum configuration parameters that you can update based on the above questions, along with some guidance:

|

Parameter |

Description |

Default value |

|

autovacuum_vacuum_threshold |

Specifies the minimum number of updated or deleted tuples needed to trigger a |

50 |

|

autovacuum_vacuum_scale_factor |

Specifies a fraction of the table size to add to autovacuum_vacuum_threshold when deciding whether to trigger a |

5 percent |

|

autovacuum_vacuum_cost_limit |

Specifies the cost limit value that will be used in automatic |

-1 |

|

autovacuum_vacuum_cost_delay |

Specifies the cost delay value that will be used in automatic |

20ms |

|

autovacuum_nap_time |

Specifies the minimum delay between autovacuum runs on any given database. In each round the daemon examines the database and issues |

15s |

|

autovacuum_max_workers |

Specifies the maximum number of autovacuum processes (other than the autovacuum launcher) that may be running at any one time. The default is three. This parameter can only be set at server start. |

3 |

The above configurations can be updated using the Azure portal or Azure CLI.

Autovacuum costs

These are the costs of running a vacuum operation:

- Takes a lock on the data pages vacuum runs on.

- Uses compute and memory when running.

This implies that vacuum shouldn't run either too frequently or too infrequently, it needs to be adaptive to the workload. We recommend testing all autovacuum parameter changes due to the tradeoffs of each one.

When does it start?

Autovacuum is triggered when the number of dead tuples exceeds autovacuum_vacuum_threshold + autovacuum_vacuum_scale_factor * reltuples, reltuples here is a constant.

Cleanup from autovacuum needs to keep up with the database load, otherwise you could run out of storage and experience a general slowdown in queries. This means that amortized over time, the rate at which vacuum cleans up dead tuples should equal the rate at which dead tuples are created.

Databases with many updates/deletes have more dead tuples and need more space. Generally, databases with many updates/deletes benefit from low values of autovacuum_vacuum_scale_factor and low values of autovacuum_vacuum_threshold to prevent prolonged accumulation of dead tuples. You could use higher values for both parameters with smaller databases because the need for vacuum there is less urgent. Note that frequent vacuuming comes at the cost of compute and memory.

Also note that the default scale factor of 20 percent works fine for tables with a low percent of dead tuples, but not for tables with a high percentage of dead tuples. For example, on a 20 GB table this translates to 4 GB of dead tuples and on a 1 TB table it’s 200 GB of dead tuples.

With PostgreSQL you can set these parameters at the table level or instance level. Today, these parameters can be set at the table level only in Azure Database for PostgreSQL.

How much should autovacuum clean when it starts?

Since there is a cost to running autovacuum, there are parameters for controlling the runtime of vacuum operations. The following parameters help estimate the cost of running vacuum:

-

vacuum_cost_page_hit = 1

-

vacuum_cost_page_miss = 10

-

vacuum_cost_page_dirty = 20

The vacuum process reads physical pages and checks for dead tuples. Every page in shared_buffers is considered to have a cost of 1 (vacuum_cost_page_hit), every other page is considered to have a cost of 20 (vacuum_cost_page_dirty) if it has dead tuples or 10 (vacuum_cost_page_miss) if there are no dead tuples. The vacuum operation stops when the process exceeds autovacuum_vacuum_cost_limit, which is the parameter to control the cost of vacuuming.

After the limit is reached, the process sleeps for the duration specified by the autovacuum_vacuum_cost_delay parameter before being invoked again. If the limit is not reached, autovacuum starts after the value specified by the autovacuum_nap_time parameter.

In summary, the autovacuum_vacuum_cost_delay and autovacuum_vacuum_cost_limit parameters control how much data cleanup is allowed per unit time. Note, that the default values are too low for most pricing tiers. The optimal values for these parameters are pricing tier-dependent and should be configured accordingly.

The autovacuum_max_workers parameter determines the maximum number of autovacuum processes that can be running simultaneously.

With PostgreSQL you can set these parameters at the table level or instance level. Today, these parameters can be set at the table level only in Azure Database for PostgreSQL.

Optimizing autovacuum per table

All the configuration parameters above may be configured per table, for example:

ALTER TABLE t SET (autovacuum_vacuum_threshold = 1000); ALTER TABLE t SET (autovacuum_vacuum_scale_factor = 0.1); ALTER TABLE t SET (autovacuum_vacuum_cost_limit = 1000); ALTER TABLE t SET (autovacuum_vacuum_cost_delay = 10);

Autovacuum is a per table synchronous process. The larger percent of dead tuples a table has, the harder it will be to autovacuum. Splitting tables that have a high rate of updates/deletes in smaller chunks will help to parallelize autovacuum and reduce the time it takes to complete an autovacuum on one table. You can also increase the number of parallel autovacuum workers to ensure workers are liberally scheduled.

References

PostgreSQL documentation – Chapter 18, Server Configuration.

PostgreSQL documentation – Chapter 24, Routine Database Maintenance Tasks.

Optimizing bulk inserts

For customers that have workload operations that involve transient data or that insert large datasets in bulk, consider using unlogged tables.

Unlogged tables is a PostgreSQL feature that can be used effectively to optimize bulk inserts. PostgreSQL uses Write-Ahead Logging (WAL), which provides atomicity and durability two of the ACID properties by default. Inserting into an unlogged table would mean PostgreSQL would do inserts without writing into the transaction log, which itself is an I/O operation, making these tables considerably faster than ordinary tables.

However, they are not crash-safe. An unlogged table is automatically truncated after a crash or subject to an unclean shutdown. The contents of an unlogged table are also not replicated to standby servers. Any indexes created on an unlogged table are automatically unlogged as well. After the insert operation completes, you may convert the table to logged so the insert is durable.

On some customer workloads, we have experienced approximately a 15-20 percent performance improvement when using unlogged tables.

- You may create an unlogged table using the syntax CREATE UNLOGGED TABLE tableName.

- You may convert a logged Table to an unlogged table using the syntax ALTER tableName SET UNLOGGED.

- You may convert an Unlogged Table to a logged table using the syntax ALTER tableName SET LOGGED.

References

PostgreSQL documentation – Create Table SQL Commands

Optimizing client applications

TCP_NODELAY

By default, TCP uses Nagle's algorithm which optimizes by batching up outgoing packets. This means fewer sends and this works well if the application sends packets frequently and latency is not the highest priority. Latency gains can be realized by sending on sockets created with the TCP_NODELAY option enabled. This results in lower latency but more sends. TCP_NODELAY is a client-side setting that should be considered on a client Virtual Machine (VM). Applications that benefit from the TCP_NODELAY option typically tend to do smaller infrequent writes and are particularly sensitive to latency. As an example, latency can be reduced from 15-40 ms to 2-3 ms with this setting.

CPU exhaustion

If CPU usage/CPU percent for an Azure Database for PostgreSQL server is saturated at 100 percent, first use pg_stat_statements in PostgreSQL to determine which queries are taking longest. If after optimizing the long running queries CPU usage is still high, consider scaling up to the next vCore tier. For example, if the CPU usage is hovering around 100 percent consistently for General Purpose 4 vCore, scale up to a General Purpose 8 vCore.

High CPU usage is not the only indicator of CPU bottleneck. Single-threaded applications can also result in CPU exhaustion of one CPU while the other CPUs are under-utilized. Consider parallelizing your workload to take advantage of all the vCores available.

Optimizing pg_stat_statements

Pg_stat_statements is a PostgreSQL extension that is enabled by default in Azure Database for PostgreSQL. The extension provides a means to track execution statistics of all SQL statements executed by a server. However, this module hooks into every query execution and comes with a non-trivial performance cost. Enabling pg_stat_statements forces query text writes to files on disk.

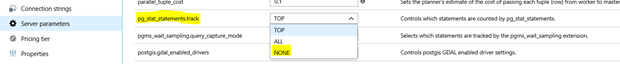

For customers that have unique queries with long query text or are not actively monitoring pg_stat_statements, we recommend disabling pg_stat_statements for best performance by setting "pg_stat_statements.track" to none.

On some customer workloads we have seen up to a 50 percent performance improvement by disabling ps_stat_statements. However, the tradeoff one makes by disabling pg_stat_statements is the inability to troubleshoot performance issues.

To set pg_stat_statements.track to NONE:

- In the Azure portal, navigate to the PostgreSQL resource management page and select the server parameters blade.

- Using CLI

az postgres server configuration set –name pg_stat_statements.track –resource-group myresourcegroup –server mydemoserver –value NONE.

Optimizing query time with toast table storage strategy

There are four different strategies to store toast-able columns on disk representing various combinations between compression and out-of-line storage. The strategy can be set at the level of data type and at the column level.

- Plain prevents either compression or out-of-line storage; furthermore, it disables use of single-byte headers for varlena types. This is the only possible strategy for columns of non-toast-able data types.

- Extended allows both compression and out-of-line storage. This is the default for most toast-able data types. Compression will be attempted first, then out-of-line storage if the row is still too big.

- External allows out-of-line storage but not compression. Use of External will make substring operations on wide text and bytea columns faster, at the penalty of increased storage space, because these operations are optimized to fetch only the required parts of the out-of-line value when it is not compressed.

- Main allows compression but not out-of-line storage. Actually, out-of-line storage will still be performed for such columns, but only as a last resort when there is no other way to make the row small enough to fit on a page.

If your queries access toast-able data types, consider using Main instead of the default Extended option to reduce query times. Main does not preclude out-of-line storage. On the other hand, if your queries do not access toast-able data types, it might be beneficial to retain the Extended option. So a bigger portion of the rows of the main table will fit in the shared buffer cache, helping performance.

If you have a workload using a schema with wide tables and high character counts, consider using PostgreSQL toast tables. An example customer table had 350 plus columns with several columns spanning 255 characters. Their benchmark query time reduced from 4203 seconds to 467 seconds, an 89 percent improvement, after converting the toast strategy.

References

PostgreSQL documentation – Chapter 66, Database Physical Storage.

Acknowledgements

Special thanks to Saikat Sen, Sunil Kamath, Fady Sedrak, Raymond Martin, Sameer Arora, Kamil Kedzia, Rachel Agyemang, Harini Gupta, Jan Engelsberg, Bhavin Gandhi, Arun Sundaram and Michal Primke for their contributions to this posting.

We hope that you will take advantage of the Azure Database for PostgreSQL service. Please continue to provide feedback on the features and functionality that you want to see next. Start today by creating your free Azure account, and then create an instance of Azure Database for PostgreSQL. If you need any help or have questions, please check out the Azure Database for PostgreSQL documentation. You can also reach out to us by using the Ask Azure DB for PostgreSQL alias, and be sure to follow us on Twitter @AzureDBPostgres and #postgresql for the latest news and announcements.

Source: Azure Blog Feed