Query Azure Storage analytics logs in Azure Log Analytics

Log Analytics is a service that collects telemetry and other data from a variety of sources and provides a query language for advanced analytics. After you post logging data to a Log Analytics workspace with the HTTP Data Collector API, you can query the logs for troubleshooting, visualize the data for monitoring, or create alerts based on log searches. For more details, see Log Analytics.

Tighter integration with Log Analytics makes troubleshooting storage operations much easier. In this blog post, we show how to convert Azure Storage analytics logs to JSON and post them to an Azure Log Analytics workspace. You can then use the analysis features of Log Analytics on logs from Azure Storage (Blob, Table, and Queue). The major steps are:

- Create workspace in Log Analytics

- Convert Storage Analytics logs to JSON

- Post logs to Log Analytics workspace

- Query logs in Log Analytics workspace

- Visualize log query in Log Analytics workspace

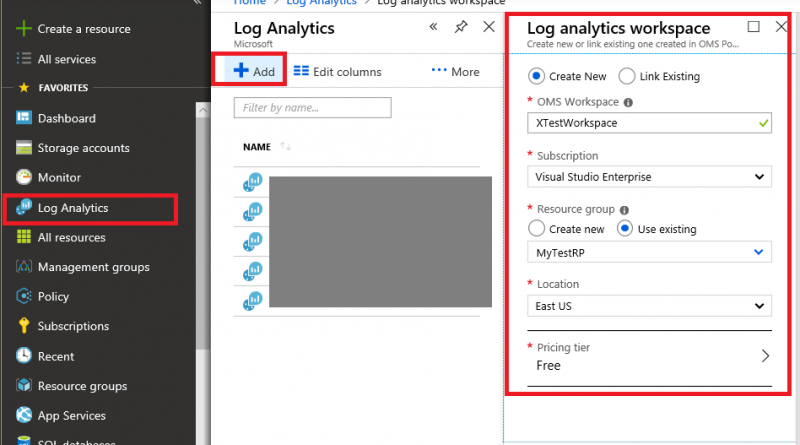

Create workspace in Log Analytics

First, create a Log Analytics workspace. The following screenshot shows how to create one in the Azure portal.

Convert Storage Analytics logs to JSON

Azure Storage provides analytics logs for Blob, Table, and Queue. The analytics logs are stored as blobs in the $logs container of the same storage account. Blob names follow a pattern such as "blob/2018/10/07/0000/000000.log". You can use Azure Storage Explorer to browse the folder structure and the log files. The following screenshot shows the structure in Azure Storage Explorer:
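As a rough illustration of that layout, the following Python sketch computes the $logs prefix for one service and hour and reads the matching log lines. It assumes one hhmm folder per hour with the minutes always "00", as in the example name. `read_log_lines` additionally assumes the azure-storage-blob (v12) package and a storage connection string. Both helper names are hypothetical.

```python
from datetime import datetime, timezone
from typing import Iterator


def log_blob_prefix(service: str, hour: datetime) -> str:
    """Return the $logs prefix for one service and hour, e.g. "blob/2018/10/07/2000/".

    Assumption: one hhmm folder per hour, with the minutes always "00".
    Naive datetimes are treated as UTC.
    """
    if service not in ("blob", "table", "queue"):
        raise ValueError(f"unsupported service: {service!r}")
    if hour.tzinfo is None:
        hour = hour.replace(tzinfo=timezone.utc)
    hour = hour.astimezone(timezone.utc)
    return f"{service}/{hour:%Y/%m/%d/%H}00/"


def read_log_lines(connection_string: str, service: str, hour: datetime) -> Iterator[str]:
    """Yield every log line stored under the prefix for the given service and hour.

    Hypothetical helper. It requires the azure-storage-blob (v12) package, imported
    here so that log_blob_prefix can be used without it.
    """
    from azure.storage.blob import ContainerClient

    container = ContainerClient.from_connection_string(
        connection_string, container_name="$logs"
    )
    for blob in container.list_blobs(name_starts_with=log_blob_prefix(service, hour)):
        content = container.download_blob(blob.name).readall()
        yield from content.decode("utf-8").splitlines()
```

For example, `log_blob_prefix("blob", datetime(2018, 10, 7, 20, 45, tzinfo=timezone.utc))` yields the prefix for all Blob service log files written during that hour.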

Each line in a log file is one log record for one request, with the fields separated by semicolons. You can find the schema definition in Storage Analytics Log Format. The following is a sample log record:

```text
1.0;2014-06-19T22:59:23.1967767Z;GetBlob;AnonymousSuccess;200;17;16;anonymous;;storagesample;blob;"https://storagesample.blob.core.windows.net/sample-container1/00001.txt";"/storagesample/sample-container1/00001.txt";61d2e3f6-bcb7-4cd1-a81e-4f8f497f0da2;0;192.100.0.102:4362;2014-02-14;283;0;354;23;0;;;""0x8D15A2913C934DE"";Thursday, 19-Jun-14 22:58:10 GMT;;"WA-Storage/4.0.1 (.NET CLR 4.0.30319.34014; Win32NT 6.3.9600.0)";;"44dfd78e-7288-4898-8f70-c3478983d3b6"
```

Before posting, you need to convert the log records to JSON. You can use any script for the conversion, but it must handle the following cases:

- Semicolons inside columns: Some columns, such as request-url or user-agent-header, may contain semicolons. Encode these semicolons before splitting the record on semicolons, then decode them after the split.

- Quotation marks inside columns: Some columns, such as client-request-id or etag-identifier, may already contain quotation marks. When you add quotation marks around the other values, skip these columns so that they are not quoted twice.

- Key names: Use the column names defined in Storage Analytics Log Format, such as version-number or request-start-time, as the JSON key names. This lets you query by them easily in Log Analytics.

The following sample shows the prepared JSON for one log record:

```json
[
  {
    "version-number": "1.0",
    "request-start-time": "2018-10-07T20:00:52.4036565Z",
    "operation-type": "CreateContainer",
    "request-status": "ContainerAlreadyExists",
    "http-status-code": "409",
    "end-to-end-latency-in-ms": "6",
    "server-latency-in-ms": "6",
    "authentication-type": "authenticated",
    "requester-account-name": "testaccount",
    "owner-account-name": "testaccount",
    "service-type": "blob",
    "request-url": "https://testaccount.blob.core.windows.net/insights-metrics-pt1m?restype=container",
    "requested-object-key": "/testaccount/insights-metrics-pt1m",
    "request-id-header": "99999999-c01e-0085-4a78-000000000000",
    "operation-count": "0",
    "requester-ip-address": "100.101.102.103:12345",
    "request-version-header": "2017-04-17",
    "request-header-size": "432",
    "request-packet-size": "0",
    "response-header-size": "145",
    "response-packet-size": "230",
    "request-content-length": "0",
    "request-md5": "",
    "server-md5": "",
    "etag-identifier": "",
    "last-modified-time": "",
    "conditions-used": "",
    "user-agent-header": "Azure-Storage/8.4.0 (.NET CLR 4.0.30319.42000; Win32NT 6.2.9200.0)",
    "referrer-header": "",
    "client-request-id": "88888888-ed38-4a90-bb3f-000000000000"
  }
]
```
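The conversion rules above can be sketched in Python as follows. This is a rough illustration, not the blog's PowerShell sample, and it rests on several assumptions:

- Records are version 1.0, with exactly the 30 columns that appear as keys in the sample JSON.
- A semicolon between double quotes belongs to the value. It is encoded to a placeholder character before the split and decoded afterward.
- The quotation marks that wrap values such as request-url, etag-identifier, or client-request-id are stripped.

All function names are hypothetical.

```python
import json
from typing import Dict, Iterable

# Column names from the Storage Analytics Log Format (version 1.0),
# in the same order as the keys of the sample JSON record.
COLUMNS = [
    "version-number", "request-start-time", "operation-type", "request-status",
    "http-status-code", "end-to-end-latency-in-ms", "server-latency-in-ms",
    "authentication-type", "requester-account-name", "owner-account-name",
    "service-type", "request-url", "requested-object-key", "request-id-header",
    "operation-count", "requester-ip-address", "request-version-header",
    "request-header-size", "request-packet-size", "response-header-size",
    "response-packet-size", "request-content-length", "request-md5", "server-md5",
    "etag-identifier", "last-modified-time", "conditions-used",
    "user-agent-header", "referrer-header", "client-request-id",
]

PLACEHOLDER = "\x00"  # assumed never to appear in a log line


def encode_quoted_semicolons(line: str) -> str:
    """Replace semicolons that sit between double quotes with PLACEHOLDER."""
    out = []
    in_quotes = False
    for ch in line:
        if ch == '"':
            in_quotes = not in_quotes
        elif ch == ";" and in_quotes:
            ch = PLACEHOLDER
        out.append(ch)
    return "".join(out)


def parse_log_line(line: str) -> Dict[str, str]:
    """Convert one semicolon-delimited log record into a dict keyed by column name."""
    fields = encode_quoted_semicolons(line.rstrip("\r\n")).split(";")
    if len(fields) != len(COLUMNS):
        raise ValueError(f"expected {len(COLUMNS)} fields, got {len(fields)}")
    record = {}
    for name, raw in zip(COLUMNS, fields):
        # Decode the protected semicolons, then drop wrapping quotation marks
        # so values are not quoted twice in the JSON output.
        record[name] = raw.replace(PLACEHOLDER, ";").strip('"')
    return record


def logs_to_json(lines: Iterable[str]) -> str:
    """Build the JSON array payload from log lines, skipping blank lines."""
    return json.dumps([parse_log_line(line) for line in lines if line.strip()])
```

For the sample log record shown earlier, `parse_log_line` keeps the user agent "WA-Storage/4.0.1 (.NET CLR 4.0.30319.34014; Win32NT 6.3.9600.0)" as a single value even though it contains a semicolon. All values stay strings, as in the sample JSON.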

Post logs to Log Analytics workspace

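Azure Log Analytics provides the HTTP Data Collector API for posting custom log data to a Log Analytics workspace. You can follow the sample code in that API's documentation to send the JSON payload prepared in the previous step. With the PowerShell sample, the call looks like this:

```powershell
# $customerId: workspace ID; $sharedKey: workspace key; $json: payload from the previous step; $logType: record type name
Post-LogAnalyticsData -customerId $customerId -sharedKey $sharedKey -body ([System.Text.Encoding]::UTF8.GetBytes($json)) -logType $logType
```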
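Each request to the HTTP Data Collector API carries a SharedKey signature. The signature is an HMAC-SHA256, keyed with the base64-decoded workspace key, over the method, the content length, the content type, the x-ms-date header, and the /api/logs resource. A rough Python counterpart of the PowerShell helper is sketched below. The function names are hypothetical. The endpoint host and the api-version value 2016-04-01 follow the API's documentation for the public Azure cloud; verify them for your environment.

```python
import base64
import hashlib
import hmac
import urllib.request
from datetime import datetime, timezone
from email.utils import format_datetime
from typing import Optional


def rfc1123(dt: datetime) -> str:
    """Format an aware datetime as the RFC 1123 string used in x-ms-date."""
    return format_datetime(dt.astimezone(timezone.utc), usegmt=True)


def build_signature(customer_id: str, shared_key: str, date: str,
                    content_length: int, method: str = "POST",
                    content_type: str = "application/json",
                    resource: str = "/api/logs") -> str:
    """Return the SharedKey Authorization header value for one request."""
    string_to_hash = (
        f"{method}\n{content_length}\n{content_type}\n"
        f"x-ms-date:{date}\n{resource}"
    )
    decoded_key = base64.b64decode(shared_key)
    digest = hmac.new(decoded_key, string_to_hash.encode("utf-8"), hashlib.sha256).digest()
    return f"SharedKey {customer_id}:{base64.b64encode(digest).decode('ascii')}"


def post_log_analytics_data(customer_id: str, shared_key: str, body: bytes,
                            log_type: str,
                            time_generated_field: Optional[str] = None) -> int:
    """POST a UTF-8 JSON payload to the workspace and return the HTTP status code."""
    date = rfc1123(datetime.now(timezone.utc))
    headers = {
        "Content-Type": "application/json",
        "Authorization": build_signature(customer_id, shared_key, date, len(body)),
        "Log-Type": log_type,  # records land in the table <log_type>_CL
        "x-ms-date": date,
    }
    if time_generated_field:
        # Optional: name of a payload field to use as TimeGenerated.
        headers["time-generated-field"] = time_generated_field
    url = (f"https://{customer_id}.ods.opinsights.azure.com"
           "/api/logs?api-version=2016-04-01")
    request = urllib.request.Request(url, data=body, headers=headers, method="POST")
    with urllib.request.urlopen(request) as response:
        return response.status
```

A call such as `post_log_analytics_data(workspace_id, workspace_key, json_text.encode("utf-8"), "MyStorageLogs1")`, where json_text is the payload from the previous step, mirrors the PowerShell call. `urlopen` raises `HTTPError` for a rejected request.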

Query logs in Log Analytics workspace

If you specify a record type such as MyStorageLogs1 when posting logs, Log Analytics stores the records in a custom log table named MyStorageLogs1_CL, and that is the name you use in queries. The following screenshot shows how to query the imported Storage analytics logs in Log Analytics.
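The naming rule can be captured in a small Python sketch that builds a query for the custom table. Besides the portal, the query can also be run from code. `run_query` assumes the azure-monitor-query and azure-identity packages. The workspace ID is the same value used as the customer ID when posting. All helper names are hypothetical.

```python
from datetime import timedelta


def custom_log_table(log_type: str) -> str:
    """Records posted with Log-Type X are stored in the custom table X_CL."""
    return f"{log_type}_CL"


def recent_records_query(log_type: str, limit: int = 100) -> str:
    """Build a KQL query that returns the most recently ingested records."""
    return (
        f"{custom_log_table(log_type)}\n"
        f"| sort by TimeGenerated desc\n"
        f"| take {limit}"
    )


def run_query(workspace_id: str, query: str,
              timespan: timedelta = timedelta(days=1)):
    """Run a KQL query against the workspace (hypothetical helper).

    Requires azure-monitor-query and azure-identity, imported here so the
    query builders above work without them.
    """
    from azure.identity import DefaultAzureCredential
    from azure.monitor.query import LogsQueryClient, LogsQueryStatus

    client = LogsQueryClient(DefaultAzureCredential())
    response = client.query_workspace(workspace_id, query, timespan=timespan)
    if response.status != LogsQueryStatus.SUCCESS:
        raise RuntimeError(f"query returned partial results: {response.partial_error}")
    return response.tables
```

Pasting the output of `recent_records_query("MyStorageLogs1")` into the Logs page of the workspace gives the same result as running it through `run_query`.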

Visualize log query in Log Analytics

To aggregate query results and look for patterns or trends, you can combine a query with the built-in visualizations in Log Analytics. The following screenshot shows how to visualize a query result:
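A typical aggregation counts requests per time bin and renders the result as a chart. The Python sketch below only builds such a KQL string. Any `group_by` column name you pass, such as operation_type_s, is an assumption about how the ingested fields were named, so check the table schema first. Unless a time-generated-field header was set when posting, TimeGenerated reflects ingestion time rather than request-start-time. The function name is hypothetical.

```python
from typing import Optional


def trend_query(log_type: str, group_by: Optional[str] = None,
                bin_size: str = "1h", days: int = 7) -> str:
    """Build a KQL query that counts records per time bin and draws a time chart."""
    by = f"bin(TimeGenerated, {bin_size})"
    if group_by:
        # Custom log column names depend on how the fields were ingested;
        # verify the name in the table schema before relying on it.
        by += f", {group_by}"
    return (
        f"{log_type}_CL\n"
        f"| where TimeGenerated > ago({days}d)\n"
        f"| summarize count() by {by}\n"
        f"| render timechart"
    )
```

`trend_query("MyStorageLogs1")` produces an hourly request count over the last seven days. Adding a grouping column splits the chart into one series per value.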

Sample code

A sample PowerShell script shows how to convert Storage Analytics log data to JSON and post the JSON data to a Log Analytics workspace.

Next steps

Read more to continue learning about Storage Analytics and Log Analytics, and sign up for an Azure account to create a Storage account.

Reading log data from a Storage account incurs charges for the read operations. For detailed pricing, see Blobs pricing. Also note that Log Analytics charges are based on Azure Monitor pricing.

Azure Storage is working with the Azure Monitor team in unifying the logging pipeline. We hope to have built-in integration with Log Analytics for Azure Storage logs soon, and we will keep you posted when the plan is determined. If you have any feedback or suggestions, you can email Azure Storage Analytics Feedback.

Source: Azure Blog Feed