SAP on Azure Architecture – Designing for performance and scalability

This is the second in a four-part blog series on designing an SAP on Azure Architecture. In the first part of the series we covered designing for security. Robust SAP on Azure Architectures are built on the pillars of security, performance and scalability, availability and recoverability, and efficiency and operations. This blog focuses on designing for performance and scalability.

Microsoft support in network and storage for SAP

Microsoft Azure is the eminent public cloud for running SAP applications. Mission-critical SAP applications run reliably on Azure, which is a hyperscale, enterprise-proven platform offering scale, agility, and cost savings for your SAP estate.

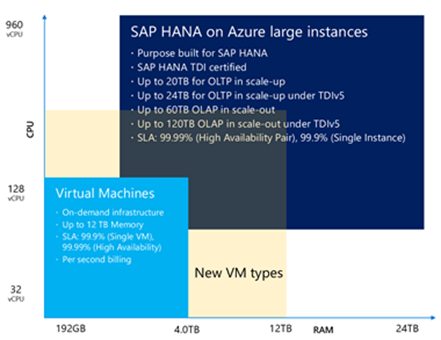

With the largest portfolio of SAP HANA-certified IaaS cloud offerings, customers can run their SAP HANA production scale-up applications on certified virtual machines ranging from 192 GB to 6 TB of memory. Additionally, for SAP HANA scale-out applications such as BW on HANA and BW/4HANA, Azure supports virtual machines with 2 TB of memory and up to 16 nodes, for a total of up to 32 TB. For customers that require extreme scale today, Azure offers bare-metal HANA Large Instances for SAP HANA scale-up to 20 TB (24 TB with TDIv5) and SAP HANA scale-out to 60 TB (120 TB with TDIv5).

Our customers, such as CONA Services, are running some of the largest SAP HANA workloads of any public cloud, with a 28 TB SAP HANA scale-out implementation.

Designing for performance

Performance is a key driver for digitizing business processes and accelerating digital transformation. Production SAP applications such as SAP ERP or S/4HANA need to be performant to maximize efficiency and ensure a positive end-user experience. As such, it is essential to perform a detailed sizing exercise on compute, storage and network for your SAP applications on Azure.

Designing compute for performance

In general, there are two ways to determine the proper size of SAP systems to be implemented in Azure: reference sizing or the SAP Quick Sizer.

For existing on-premises systems, you should reference system configuration and resource utilization data. The system utilization information is collected by the SAP OS Collector and can be reported via SAP transaction OS07N as well as the EarlyWatch Alert. Similar information can be retrieved with any system performance and statistics gathering tool. For new systems, you should use the SAP Quick Sizer.

The links below also list the network and storage throughput for each Azure virtual machine type:

Designing highly performant storage

In addition to selecting an appropriate database virtual machine based on the SAPS and memory requirements, it is important to ensure that the storage configuration is designed to meet the IOPS and throughput requirements of the SAP database. Make sure that the chosen virtual machine is itself capable of driving the required IOPS and throughput, because the virtual machine's own limits cap what the attached disks can deliver. Azure premium managed disks can be striped to aggregate IOPS and throughput values. For example, 5 x P30 disks would offer 25K IOPS and 1000 MB/s throughput.
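The striping arithmetic above can be sketched in a few lines of Python. This is a minimal illustration, not a sizing tool: the per-disk P30 figures (5,000 IOPS and 200 MB/s) are simply those implied by the 5 x P30 example, and the virtual machine caps passed in the second call are hypothetical values chosen only to show how a VM limit bounds the striped result.

```python
from dataclasses import dataclass
from typing import Optional, Tuple


@dataclass(frozen=True)
class DiskTier:
    name: str
    iops: int             # IOPS per disk
    throughput_mbps: int  # MB/s per disk


# Per-disk values implied by the example in the text (5 x P30 = 25K IOPS, 1000 MB/s).
P30 = DiskTier("P30", iops=5_000, throughput_mbps=200)


def striped_capacity(
    tier: DiskTier,
    count: int,
    vm_iops_cap: Optional[int] = None,
    vm_mbps_cap: Optional[int] = None,
) -> Tuple[int, int]:
    """Return (IOPS, MB/s) for `count` striped disks, bounded by optional VM caps."""
    if count < 1:
        raise ValueError("count must be at least 1")
    iops = tier.iops * count
    mbps = tier.throughput_mbps * count
    # The VM's own limits bound what the stripe set can actually deliver.
    if vm_iops_cap is not None:
        iops = min(iops, vm_iops_cap)
    if vm_mbps_cap is not None:
        mbps = min(mbps, vm_mbps_cap)
    return iops, mbps


# Stripe of five P30 disks with no VM cap applied.
print(striped_capacity(P30, 5))

# Same stripe on a VM with hypothetical caps of 20,000 IOPS and 750 MB/s.
print(striped_capacity(P30, 5, vm_iops_cap=20_000, vm_mbps_cap=750))
```

In the second call the VM is the bottleneck, so adding more disks to the stripe set would not improve performance; a larger virtual machine would be needed instead.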

In the case of SAP HANA databases, we have published a storage configuration guideline covering production scenarios and also a cost-conscious non-production variant. Following our recommendation for production will ensure that the storage is configured to successfully pass all SAP HCMT KPIs. It is imperative to enable Write Accelerator on the disks associated with the /hana/log volume, as this facilitates sub-millisecond write latency for 4 KB and 16 KB block sizes.

Ultra Disk is designed to deliver consistent performance and low latency for I/O-intensive workloads such as SAP HANA and any database (SQL, Oracle, etc.). With Ultra Disk you can reach the maximum virtual machine I/O limits with a single disk, without having to stripe multiple disks as is required with premium disks.

As of September 2019, Azure Ultra Disk Storage is generally available in the East US 2, Southeast Asia, and North Europe regions and is supported on DSv3 and ESv3 VM types. Refer to the FAQ for the latest on supported VM sizes for both Windows and Linux OS hosts. This video demonstrates the leading performance of Ultra Disk Storage.

Designing network for performance

As the Azure footprint grows, a single availability zone may span multiple physical data centers, which can result in network latency impacting your SAP application performance. A proximity placement group (PPG) is a logical grouping that ensures Azure compute resources are physically located close to each other, achieving the lowest possible network latency, for example by co-locating your SAP application and database VMs. For more information, refer to our detailed documentation for deploying your SAP application with PPGs.

We recommend that you consider PPGs within your SAP deployment architecture and that you enable Accelerated Networking on your SAP application and database VMs. Accelerated Networking enables single root I/O virtualization (SR-IOV) for your virtual machine, which improves networking performance by bypassing the host in the data path. SAP application server to database server latency can be tested with the ABAPMeter report /SSA/CAT.

ExpressRoute Global Reach allows you to link ExpressRoute circuits from on-premises to Azure in different regions together to make a private network between your on-premises networks. Global Reach can be used for your SAP HANA Large Instance deployment to enable direct access from on-premises to your HANA Large Instance units deployed in different regions. Additionally, Global Reach can enable direct communication between your HANA Large Instance units deployed in different regions.

Designing for scalability

With Azure Mv2 VMs, you can scale up to 208 vCPUs and 6 TB of memory now, and to 12 TB shortly. For databases that require more than 12 TB, we offer SAP HANA Large Instances (HLI), a purpose-built bare-metal offering that is dedicated to you. The server hardware is embedded in larger stamps that contain HANA TDI certified compute, network and storage infrastructure, in various sizes from 36 Intel CPU cores and 768 GB of memory up to a maximum size of 480 CPU cores and 24 TB of memory.
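As a rough illustration of the scale-up decision described above, the sketch below maps a required HANA memory size to a platform. The function name and the returned labels are hypothetical, and the default thresholds only restate the figures from the text (6 TB for Mv2 VMs today, 24 TB as the largest HLI size); an actual choice should be based on a full sizing exercise and the currently certified sizes.

```python
def suggest_scale_up_platform(
    memory_tb: float,
    vm_max_tb: float = 6.0,    # largest Mv2 VM memory available today, per the text
    hli_max_tb: float = 24.0,  # largest HANA Large Instance size, per the text
) -> str:
    """Suggest a platform for a single-node (scale-up) SAP HANA database."""
    if memory_tb <= 0:
        raise ValueError("memory_tb must be positive")
    if memory_tb <= vm_max_tb:
        return "Azure virtual machine"
    if memory_tb <= hli_max_tb:
        return "SAP HANA Large Instance"
    # Beyond the largest single node, scale-up is no longer an option.
    return "consider a scale-out configuration"


for size_tb in (4, 10, 30):
    print(size_tb, "TB ->", suggest_scale_up_platform(size_tb))

# Once 12 TB VMs are available, the VM threshold can be raised.
print(suggest_scale_up_platform(10, vm_max_tb=12.0))
```

Keeping the thresholds as parameters rather than constants reflects that the limits in the text are a point-in-time snapshot.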

Azure global regions at HyperScale

Azure has more global regions than any other cloud provider, offering the scale needed to bring applications closer to users around the world, preserving data residency, and offering comprehensive compliance and resiliency options for customers. As of Q3 2019, Azure spans a total of 54 regions and is available in 140 countries.

Customers like the Carlsberg Group transformed IT into a platform for innovation through a migration to Azure centered on its essential SAP applications. The Carlsberg migration to Azure encompassed 700 servers and 350 applications, including the essential SAP applications, and involved 1.6 petabytes of data, including 8 terabytes for the main SAP database.

Within this blog we have touched upon several topics relating to designing highly performant and scalable architectures for SAP on Azure.

As customers embark on their SAP to Azure journey, we recommend that they dive into the SAP on Azure documentation during the various phases of the deployment to deepen their understanding of using Azure for hosting and running their SAP applications, so that they can methodically deploy highly performant and scalable architectures. The SAP workload on Azure planning and deployment checklist can be used as a compass to navigate through the various phases of a customer’s SAP greenfield deployment or on-premises to Azure migration project.

In blog #3 in our series we will cover Designing for Availability and Recoverability.

Source: Azure Blog Feed