Preview: Live transcription with Azure Media Services

Azure Media Services provides a platform for broadcasting live events. You can use our APIs to ingest, transcode, and dynamically package and encrypt your live video feeds for delivery via industry-standard protocols like HTTP Live Streaming (HLS) and MPEG-DASH. You can also use our APIs to integrate with CDNs and deliver to millions of concurrent viewers. Customers are using this platform for scenarios ranging from multi-day sporting events and entire seasons of professional sports to webinars and town-hall meetings.

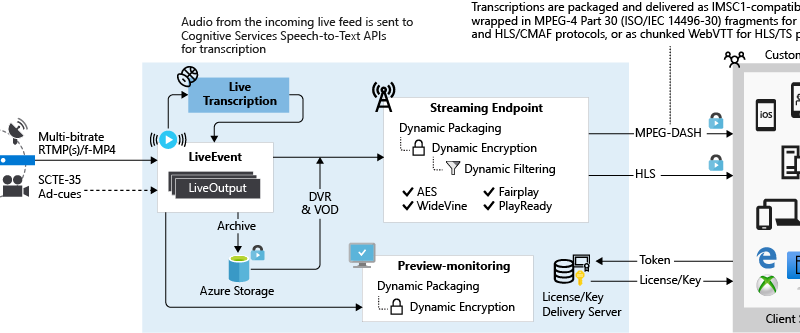

Live transcription is a new preview feature in our v3 APIs that lets you enhance the streams delivered to your viewers with machine-generated text transcribed from the spoken words in the audio feed. You can enable this option for any type of Live Event that you create in our service, including pass-through Live Events, where you configure a live encoder upstream to generate and push a multiple-bitrate live feed into the service (visualized in the diagram below).
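As a rough illustration of what enabling this option might look like, the sketch below builds a request body for a pass-through Live Event with transcription turned on. The property names (`transcriptions`, `language`, `encodingType`, `streamingProtocol`) and the helper `build_live_event_body` are assumptions made for illustration and are not taken from this article; check the v3 API reference for the exact schema before relying on them.

```python
import json


def build_live_event_body(location, language="en-US"):
    """Build a hypothetical request body for a pass-through Live Event.

    The field names below are assumptions for illustration only;
    the authoritative schema is the Media Services v3 API reference.
    """
    return {
        "location": location,
        "properties": {
            # The upstream encoder pushes the contribution feed (assumed RTMP here).
            "input": {"streamingProtocol": "RTMP"},
            # "None" is assumed to mean pass-through (no cloud transcoding).
            "encoding": {"encodingType": "None"},
            # One entry per spoken language to transcribe (assumed shape).
            "transcriptions": [{"language": language}],
        },
    }


body = build_live_event_body("West US 2")
print(json.dumps(body, indent=2))
```

The body is kept as a plain dictionary so it can be serialized and sent with whichever HTTP client or SDK you already use.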

Figure 1. Schematic diagram for live transcription

When a live contribution feed is sent to the service, the service extracts the audio signal, decodes it, and calls the Azure Cognitive Services speech-to-text APIs to transcribe the speech. The resulting text is then packaged into formats suitable for delivery via streaming protocols. For the HTTP Live Streaming (HLS) protocol with media packaged into MPEG Transport Stream (TS) fragments, the text is packaged into WebVTT fragments. For delivery via MPEG-DASH or HLS with CMAF protocols, the text is wrapped in IMSC1.1-compatible TTML and then packaged into MPEG-4 Part 30 (ISO/IEC 14496-30) fragments.
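The packaging rule described above can be sketched as a small lookup, together with a formatter for a single WebVTT cue. This is only an illustration of the mapping, not the service's implementation; the function names `caption_packaging` and `format_webvtt_cue` are hypothetical, and the sketch assumes that MPEG-DASH always takes the TTML path.

```python
def caption_packaging(protocol: str, media_container: str = "") -> str:
    """Return the text packaging used for a given delivery format.

    Assumption: MPEG-DASH uses the TTML path regardless of container.
    """
    proto = protocol.upper()
    container = media_container.upper()
    if proto == "HLS" and container == "TS":
        return "WebVTT"
    if proto in ("DASH", "MPEG-DASH") or (proto == "HLS" and container == "CMAF"):
        return "IMSC1.1 TTML in MPEG-4 Part 30"
    raise ValueError(f"unsupported combination: {protocol}/{media_container}")


def _timestamp(seconds: float) -> str:
    """Format seconds as a WebVTT timestamp (hh:mm:ss.ttt)."""
    total_ms = round(seconds * 1000)
    hours, rem = divmod(total_ms, 3_600_000)
    minutes, rem = divmod(rem, 60_000)
    secs, millis = divmod(rem, 1000)
    return f"{hours:02d}:{minutes:02d}:{secs:02d}.{millis:03d}"


def format_webvtt_cue(start: float, end: float, text: str) -> str:
    """Render one WebVTT cue: a timing line followed by the cue text."""
    return f"{_timestamp(start)} --> {_timestamp(end)}\n{text}"


print(caption_packaging("HLS", "TS"))
print(caption_packaging("MPEG-DASH"))
print(format_webvtt_cue(1.5, 4.0, "Welcome to the town hall."))
```

A player requesting HLS with TS fragments would therefore receive WebVTT cues like the one printed last, while DASH and CMAF clients receive the same text wrapped in TTML.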

You can use Azure Media Player (version 2.3.3 or newer) to play the video and display the text on a wide variety of browsers and devices. You can also play back the streams on the iOS native player. If you are building an app for Android devices, NexPlayer has verified playback of transcriptions, and you can contact them to request a demo.

Figure 2. Display of live transcription on Azure Media Player

The live transcription feature is now available in preview in the West US 2 region. Read the full article here to learn how to get started with this preview feature.

Source: Azure Blog Feed