Accelerate your AI applications with Azure NC A100 v4 virtual machines

Real-world AI has changed how people live over the past decade, reaching industries including media and entertainment, healthcare and life sciences, retail, automotive, financial services, manufacturing, and oil and gas. Speaking to a smart home device, browsing social media with recommended content, or taking a ride in a self-driving vehicle is no longer a thing of the future. With your smartphone, you can now deposit checks without going to the bank. All of these advances have been made possible through new AI breakthroughs in software and hardware.

At Microsoft, we host our deep learning inferencing, cognitive science, and applied AI services on the NC series instances. What we learn from running these workloads on our infrastructure helps drive the design decisions for the next generation of NC systems. As a result, Azure customers benefit directly from our internal experience.

We are pleased to announce that the next generation of the NC series, the NC A100 v4 series, is now available in preview. These virtual machines (VMs) come equipped with NVIDIA A100 80GB Tensor Core PCIe GPUs and 3rd Gen AMD EPYC™ Milan processors. These new offerings improve the performance and cost-effectiveness of a variety of GPU performance-bound real-world AI training and inferencing workloads. These workloads cover object detection, video processing, image classification, speech recognition, recommender systems, autonomous driving reinforcement learning, oil and gas reservoir simulation, finance document parsing, web inferencing, and more.

The NC A100 v4-series offers three VM sizes, with one, two, or four NVIDIA A100 80GB PCIe Tensor Core GPUs. The series is more cost-effective than ever before, while still giving customers the options and flexibility they need for their workloads.

| Size | vCPU | Memory (GB) | GPUs (NVIDIA A100 80 GB Tensor Core) | Azure Network (Gbps) |
| --- | --- | --- | --- | --- |
| Standard_NC24ads_A100_v4 | 24 | 220 | 1 | 20 |
| Standard_NC48ads_A100_v4 | 48 | 440 | 2 | 40 |
| Standard_NC96ads_A100_v4 | 96 | 880 | 4 | 80 |
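
As a rough illustration of how the size table might be used when planning a deployment, the sketch below picks the smallest listed size that meets a GPU and memory requirement. The figures are copied from the table; the `VmSize` class and the `smallest_size_for` helper are hypothetical names introduced for this example and are not part of any Azure SDK.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class VmSize:
    name: str
    vcpus: int
    memory_gb: int
    gpus: int
    network_gbps: int


# Values copied from the NC A100 v4 size table in this post.
NC_A100_V4_SIZES = [
    VmSize("Standard_NC24ads_A100_v4", 24, 220, 1, 20),
    VmSize("Standard_NC48ads_A100_v4", 48, 440, 2, 40),
    VmSize("Standard_NC96ads_A100_v4", 96, 880, 4, 80),
]


def smallest_size_for(gpus_needed: int, memory_gb_needed: int = 0) -> VmSize:
    """Return the smallest listed size that meets both requirements.

    Hypothetical helper for illustration only.
    """
    for size in sorted(NC_A100_V4_SIZES, key=lambda s: s.gpus):
        if size.gpus >= gpus_needed and size.memory_gb >= memory_gb_needed:
            return size
    raise ValueError("no NC A100 v4 size satisfies the request")


print(smallest_size_for(gpus_needed=2).name)  # Standard_NC48ads_A100_v4
```

Because every listed resource scales linearly with GPU count, choosing by GPU count and memory is usually enough; pricing and regional availability are deliberately left out of this sketch.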

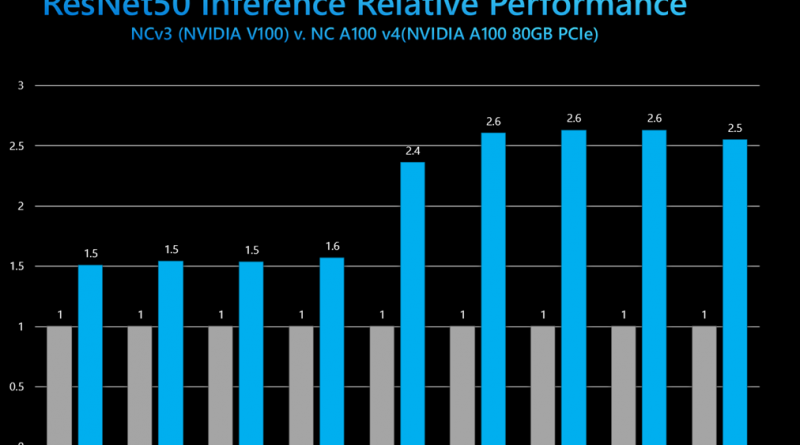

Compared to the previous NC generation (NCv3) with NVIDIA Volta architecture-based GPUs, customers will experience a performance boost of between 1.5 and 2.5 times, due to:

- Two times the GPU-to-host bandwidth.

- Four times the vCPU cores per GPU VM.

- Two times the RAM per GPU VM.

- Up to seven independent GPU instances on a single NVIDIA A100 GPU through Multi-Instance GPU (MIG) on Linux.
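
The vCPU and RAM ratios above can be sanity-checked with a little arithmetic on the two four-GPU sizes. This is only a sketch: the NC A100 v4 figures come from the size table in this post, while the NC24s_v3 figures (24 vCPUs, 448 GiB RAM, four V100 GPUs) are an assumption taken from that size's published specification and should be verified before being relied on.

```python
# NC A100 v4 figures come from the size table in this post.
NC96ADS_A100_V4 = {"vcpus": 96, "memory_gb": 880, "gpus": 4}

# Assumption: NC24s_v3 figures as recalled from its published spec
# (24 vCPUs, 448 GiB RAM, 4 V100 GPUs). Not stated in this post.
NC24S_V3 = {"vcpus": 24, "memory_gb": 448, "gpus": 4}

# Stated in the list above: up to seven MIG instances per A100 GPU.
MIG_INSTANCES_PER_A100 = 7


def ratio(new: dict, old: dict, key: str) -> float:
    """Ratio of one resource between two VM sizes with equal GPU counts."""
    return new[key] / old[key]


vcpu_ratio = ratio(NC96ADS_A100_V4, NC24S_V3, "vcpus")
ram_ratio = ratio(NC96ADS_A100_V4, NC24S_V3, "memory_gb")
max_mig_instances = NC96ADS_A100_V4["gpus"] * MIG_INSTANCES_PER_A100

print(f"vCPU ratio: {vcpu_ratio:.1f}x")
print(f"RAM ratio: {ram_ratio:.2f}x")
print(f"Max MIG instances on the 4-GPU size: {max_mig_instances}")
```

Under these assumptions the vCPU ratio is exactly four and the RAM ratio is just under two, which is consistent with the rounded figures in the list.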

Below is a sample of what we experienced while training the ResNet50 AI model on the NC96ads_A100_v4 VM size, compared with the existing NCv3 VM size NC24s_v3, which has four V100 GPUs. Tests were conducted across a range of batch sizes, from 1 to 256.

Figure 1: ResNet50 results were generated using NC24s_v3 and NC96ads_A100_v4 virtual machine sizes.
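
For readers who want to structure a similar comparison, here is a minimal sketch of a batch-size sweep harness. It is not the benchmark used for Figure 1: `placeholder_step` is a hypothetical stand-in that does dummy CPU work and would need to be replaced with a real ResNet50 training step, and the powers-of-two batch sizes are an assumption, since the post only states the range of 1 to 256.

```python
import time
from typing import Callable, Dict, List


def batch_sizes(start: int = 1, stop: int = 256) -> List[int]:
    """Powers of two from start to stop inclusive (assumed spacing)."""
    sizes = []
    size = start
    while size <= stop:
        sizes.append(size)
        size *= 2
    return sizes


def measure_throughput(step: Callable[[int], None], batch_size: int,
                       iterations: int = 5) -> float:
    """Run `step` repeatedly and return samples processed per second."""
    start = time.perf_counter()
    for _ in range(iterations):
        step(batch_size)
    elapsed = time.perf_counter() - start
    return batch_size * iterations / elapsed


def speedup(new: Dict[int, float], old: Dict[int, float]) -> Dict[int, float]:
    """Per-batch-size throughput ratio for batch sizes present in both runs."""
    return {b: new[b] / old[b] for b in new if b in old}


def placeholder_step(batch_size: int) -> None:
    """Hypothetical stand-in for one training step; does dummy CPU work."""
    sum(i * i for i in range(100 * batch_size))


# Sweep every batch size with the placeholder step and report throughput.
results = {b: measure_throughput(placeholder_step, b) for b in batch_sizes()}
for b, samples_per_sec in results.items():
    print(f"batch={b:>3}  {samples_per_sec:,.0f} samples/s")
```

Running the same sweep on each VM size and passing the two result dictionaries to `speedup` gives a per-batch-size ratio that can be compared against the 1.5 to 2.5 times range quoted above.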

For additional information on how to run this on Azure, and for additional results, please see our performance technical community blog.

With our latest addition to the NC series, you can cut model training time roughly in half and still stay within budget. You can seamlessly apply the trained cognitive science models to applications through batch inferencing, run multimillion-atom biochemistry simulations for next-generation medicine, host your web and media services in the cloud for tens of thousands of end users, and so much more.

Learn more

The NC A100 v4-series VMs are currently available in the South Central US, East US, and Southeast Asia Azure regions. They will be available in additional regions in the coming months.

For more information on the Azure NC A100 v4-series, please see:

- Sign up for the preview of the NVIDIA A100 Tensor Core PCIe GPU in the Azure NC A100 v4-series.

- Performance of NC A100 v4-series.

- Find out more about high-performance computing (HPC) in Azure.

- Microsoft documentation for NC A100 v4-series VM.

- Azure HPC optimized OS images.

- Azure GPU virtual machines.

Source: Azure Blog Feed