MLOps Blog Series Part 3: Testing scalability of secure machine learning systems using MLOps

Scalability is the capacity of a system to adjust to changes in demand by adding or removing resources. Here are some tests you can use to check the scalability of your model.

System testing

System tests check the robustness of a system's design (for example, an MLOps pipeline or an inference service) against given inputs and expected outputs. Acceptance tests, which verify that user requirements are fulfilled, can be performed as part of system tests.

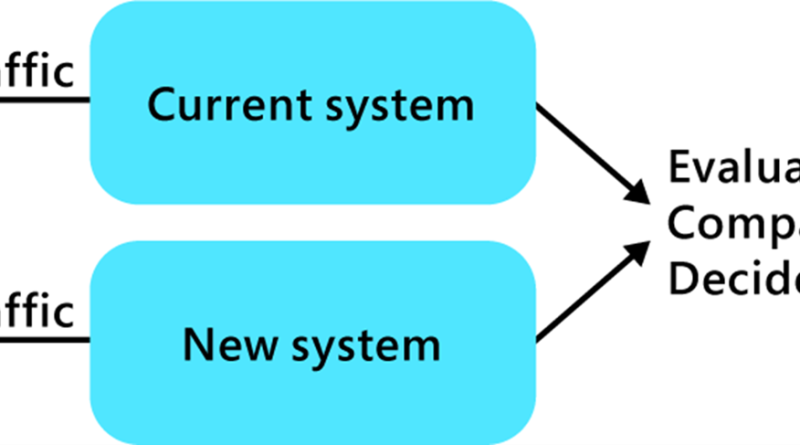
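
As a rough illustration of a system test, the sketch below runs a whole inference pipeline end to end on given inputs and compares the results with expected outputs. The pipeline itself (`preprocess`, `predict`, `inference_pipeline`) is a hypothetical toy stand-in, not the pipeline from this series; only the testing pattern is the point.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Prediction:
    label: str
    score: float


def preprocess(text: str) -> list[str]:
    # Hypothetical preprocessing step: lowercase and split on whitespace.
    return text.lower().split()


def predict(tokens: list[str]) -> Prediction:
    # Toy stand-in for a trained model (assumption, for illustration only).
    positive_words = {"good", "great", "fast"}
    hits = sum(token in positive_words for token in tokens)
    score = hits / len(tokens) if tokens else 0.0
    return Prediction("positive" if score >= 0.5 else "negative", score)


def inference_pipeline(text: str) -> Prediction:
    # The system under test: every stage chained together.
    return predict(preprocess(text))


def run_system_tests() -> list[str]:
    # Given inputs paired with expected outputs, including an edge case.
    cases = [
        ("Great fast service", "positive"),
        ("slow and broken", "negative"),
        ("", "negative"),
    ]
    failures = []
    for text, expected_label in cases:
        result = inference_pipeline(text)
        if result.label != expected_label:
            failures.append(f"{text!r}: expected {expected_label}, got {result.label}")
        if not 0.0 <= result.score <= 1.0:
            failures.append(f"{text!r}: score {result.score} outside [0, 1]")
    return failures


if __name__ == "__main__":
    problems = run_system_tests()
    print("all system tests passed" if not problems else "\n".join(problems))
```

An acceptance test would follow the same shape, with the cases taken from user requirements rather than from the design.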

A/B testing

A/B testing splits production traffic between the alternative systems being evaluated. Statistical hypothesis testing is then used to decide which system performs better.

Figure 1: A/B testing
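
To make the hypothesis-testing step concrete, here is a minimal sketch of a two-proportion z-test, one common choice when each request ends in a success or a failure (for example, a correct prediction or a click). The choice of test, the function name, and the counts are assumptions for illustration; the right test depends on the metric you compare.

```python
import math


def two_proportion_z_test(successes_a, total_a, successes_b, total_b):
    """Return (z, two-sided p-value) for the difference in success rates."""
    rate_a = successes_a / total_a
    rate_b = successes_b / total_b
    # Pooled rate under the null hypothesis that both systems are equal.
    pooled = (successes_a + successes_b) / (total_a + total_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / total_a + 1 / total_b))
    if std_err == 0:
        raise ValueError("success rates are all 0 or all 1; the test is undefined")
    z = (rate_b - rate_a) / std_err
    # Two-sided p-value from the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


if __name__ == "__main__":
    # Made-up counts: system A served 1,000 requests, system B served 1,000.
    z, p = two_proportion_z_test(100, 1000, 150, 1000)
    print(f"z = {z:.2f}, p = {p:.4f}")
    print("B differs significantly from A" if p < 0.05 else "no significant difference")
```

A small p-value suggests the difference between the two systems is unlikely to be due to chance alone; the 0.05 threshold used here is a convention, not a requirement.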

Canary testing

Canary testing is done by delivering the majority of production traffic to the current system while sending traffic from a small group of users to the new system we're evaluating.

Figure 2: Canary testing
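
One way to pick the small group of users is to hash each user ID into a bucket, so the same user always lands on the same system. The sketch below assumes that approach; `route_request`, the 5 percent default, and the `"stable"`/`"canary"` labels are hypothetical names, and a real deployment would usually do this in a load balancer or service mesh rather than in application code.

```python
import hashlib


def route_request(user_id: str, canary_percent: float = 5.0) -> str:
    """Deterministically assign a user to the 'stable' or 'canary' system."""
    digest = hashlib.sha256(user_id.encode("utf-8")).digest()
    # Map the hash to one of 10,000 buckets (0.01 percent granularity).
    bucket = int.from_bytes(digest[:8], "big") % 10_000
    return "canary" if bucket < canary_percent * 100 else "stable"


if __name__ == "__main__":
    users = [f"user-{i}" for i in range(10_000)]
    canary_share = sum(route_request(u) == "canary" for u in users) / len(users)
    print(f"share of users routed to the canary: {canary_share:.1%}")
```

Because the assignment depends only on the user ID, raising `canary_percent` step by step keeps existing canary users on the new system while adding more.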

Shadow testing

Sending the same production traffic to various systems is known as shadow testing. Shadow testing is simple to monitor and validates operational consistency.

Figure 3: Shadow testing
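
The sketch below shows one common shadow-testing setup, assumed here for illustration: every request goes to both the current (primary) system and the shadow system, the caller only ever receives the primary response, and any disagreement is recorded for later review. `handle_with_shadow` and the two toy models are hypothetical.

```python
def handle_with_shadow(request, primary, shadow, mismatches):
    """Serve the primary response while mirroring the request to the shadow."""
    response = primary(request)
    try:
        shadow_response = shadow(request)
        if shadow_response != response:
            mismatches.append((request, response, shadow_response))
    except Exception as exc:
        # A failing shadow must never affect what the user receives.
        mismatches.append((request, response, repr(exc)))
    return response


if __name__ == "__main__":
    def current_model(x):
        return x * 2

    def candidate_model(x):
        # Toy candidate that disagrees with the current model on one input.
        return 7 if x == 3 else x * 2

    log = []
    served = [handle_with_shadow(x, current_model, candidate_model, log) for x in range(1, 5)]
    print("served:", served)
    print("mismatches:", log)
```

In production the shadow call would typically run asynchronously so that it adds no latency to the primary response.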

Load testing

Load testing is a technique for simulating a real-world load on software, applications, and websites. It simulates many concurrent users to reproduce the expected usage of the application. It measures the following:

• Endurance: Whether an application can withstand the processing load it is expected to endure over an extended period.

• Volume: The application is subjected to a large volume of data to test whether the application performs as expected.

• Stress: Assessing the application's capacity to sustain a specified level of performance under adverse conditions.

• Performance: Determining how a system performs in terms of responsiveness and stability under a particular workload.

• Scalability: Measuring the application's ability to scale up or down in response to changes in the number of users.

Load tests for the above factors can be run with various software tools. Let’s look at an example of load testing an AI microservice using locust.io. The dashboard in Figure 4 shows the number of requests made to the microservice per second as well as the response times. Using these insights, we can gauge the performance of the AI microservice under a certain load.

Figure 4: Load testing using Locust.io
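
To show where numbers like requests per second and response times come from, here is a minimal standard-library sketch that simulates concurrent users and reports the same kind of metrics. It is not Locust and not the code from the demo: `fake_inference` is a hypothetical stand-in for a call to the microservice, and the user counts are arbitrary.

```python
import statistics
import time
from concurrent.futures import ThreadPoolExecutor


def fake_inference(payload):
    # Hypothetical stand-in for an HTTP call to the AI microservice.
    time.sleep(0.005)
    return {"ok": True}


def timed_call(target, payload):
    # Return the response time of a single request, in seconds.
    start = time.perf_counter()
    target(payload)
    return time.perf_counter() - start


def run_load_test(target, num_users=10, requests_per_user=20):
    """Send requests from num_users concurrent workers and summarize timings."""
    total = num_users * requests_per_user
    started = time.perf_counter()
    with ThreadPoolExecutor(max_workers=num_users) as pool:
        latencies = list(pool.map(lambda i: timed_call(target, {"id": i}), range(total)))
    elapsed = time.perf_counter() - started
    return {
        "requests": total,
        "requests_per_second": total / elapsed,
        "median_ms": statistics.median(latencies) * 1000,
        # The last of the 19 cut points for n=20 is the 95th percentile.
        "p95_ms": statistics.quantiles(latencies, n=20)[-1] * 1000,
    }


if __name__ == "__main__":
    for name, value in run_load_test(fake_inference).items():
        print(f"{name}: {value:.1f}")
```

Rerunning with a larger `num_users` and watching how throughput and the 95th-percentile response time change gives a first impression of how the service behaves as load grows. A dedicated tool such as Locust adds ramp-up control, distributed load generation, and a live dashboard on top of this basic idea.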

Learn more

To learn more about implementing the load test above, watch this demo video and check out the code in the load testing microservices GitHub repository. For further details and hands-on implementation, check out the Engineering MLOps book, or learn how to build and deploy a model in Azure Machine Learning using MLOps in the “Get Time to Value with MLOps Best Practices” on-demand webinar.

Source: Azure Blog Feed