World-class PyTorch support on Azure

Today we are excited to strengthen our commitment to supporting PyTorch as a first-class framework on Azure, with new capabilities in our Azure Machine Learning public preview refresh. Our PyTorch support also extends across many of our AI platform services and tools, which we highlight below.

In the two years since PyTorch's first release in October 2016, we've witnessed rapid and organic adoption of the deep learning framework across academia, industry, and the AI community at large. While PyTorch's Python-first integration and imperative style have long made the framework a hit among researchers, the latest PyTorch 1.0 release brings the production-level readiness and scalability needed to make it a true end-to-end deep learning platform, from prototyping to production.

Four ways to use PyTorch on Azure

Azure Machine Learning service

Azure Machine Learning (Azure ML) service is a cloud-based service that enables data scientists to carry out end-to-end machine learning workflows, from data preparation and training to model management and deployment. Using the service's rich Python SDK, you can train, hyperparameter tune, and deploy your PyTorch models with ease from any Python development environment, such as Jupyter notebooks or code editors. With Azure ML's deep learning training features, you can seamlessly move from training PyTorch models on your local machine to scaling out to the Azure cloud.

You can install the Python SDK with this simple line.

```shell
pip install azureml-sdk
```

To use the Azure ML service, you will need an Azure account and access to an Azure subscription. The first step is to create an Azure Machine Learning workspace, a centralized resource that manages your other Azure resources and training runs.

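```python
from azureml.core import Workspace

# Create the workspace (and its resource group, if it does not exist yet)
ws = Workspace.create(name='my-workspace',
                      subscription_id='<azure-subscription-id>',
                      resource_group='my-resource-group',
                      create_resource_group=True,
                      location='<azure-region>')
```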

To take full advantage of PyTorch, you will need access to at least one GPU for training, and a multi-node cluster for more complex models and larger datasets. Using the Python SDK, you can easily use Azure compute for both single-node and distributed PyTorch training. The following code snippet creates an autoscaling, GPU-enabled Azure Batch AI cluster.

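```python
from azureml.core.compute import BatchAiCompute, ComputeTarget

# Autoscale between 0 and 4 GPU nodes; with a minimum of 0, no nodes run while idle
provisioning_config = BatchAiCompute.provisioning_configuration(vm_size="STANDARD_NC6",
                                                                autoscale_enabled=True,
                                                                cluster_min_nodes=0,
                                                                cluster_max_nodes=4)

# ComputeTarget.create also takes a name for the new cluster ('gpu-cluster' is an example)
compute_target = ComputeTarget.create(ws, 'gpu-cluster', provisioning_config)
compute_target.wait_for_completion(show_output=True)
```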

From here, all you need is your training script. The following example will create an Azure ML experiment to track your runs and execute a distributed 4-node PyTorch training run.

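```python
from azureml.core import Experiment
from azureml.train.dnn import PyTorch

# Experiment names may not contain spaces
experiment = Experiment(ws, "my-pytorch-experiment")

# 4 nodes x 1 process per node = 4 MPI processes in total
pt_estimator = PyTorch(source_directory='./my-training-files',
                       compute_target=compute_target,
                       entry_script='train.py',
                       node_count=4,
                       process_count_per_node=1,
                       distributed_backend='mpi',
                       use_gpu=True)

run = experiment.submit(pt_estimator)
run.wait_for_completion(show_output=True)
```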
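With `node_count=4` and `process_count_per_node=1`, the run launches four training processes in total, and each one needs to know its place in the job. The sketch below shows one way a training script might number those processes and give each a disjoint shard of the data. It is illustrative only: the helper names (`process_layout`, `shard_indices`, `mpi_rank_from_env`) are hypothetical, the node-by-node rank ordering is an assumption, and reading `OMPI_COMM_WORLD_RANK`/`OMPI_COMM_WORLD_SIZE` assumes the processes are launched by Open MPI.

```python
from __future__ import annotations

import os
from dataclasses import dataclass


@dataclass(frozen=True)
class ProcessInfo:
    node_rank: int    # which node the process runs on
    local_rank: int   # index of the process on its node (e.g. which GPU to use)
    global_rank: int  # unique id across the whole job
    world_size: int   # total number of processes in the job


def process_layout(node_count: int, process_count_per_node: int) -> list[ProcessInfo]:
    """Enumerate all processes, numbering ranks node by node (hypothetical helper)."""
    world_size = node_count * process_count_per_node
    return [
        ProcessInfo(node, local, node * process_count_per_node + local, world_size)
        for node in range(node_count)
        for local in range(process_count_per_node)
    ]


def shard_indices(indices: list[int], global_rank: int, world_size: int) -> list[int]:
    """Give each process a disjoint, strided slice of the dataset indices."""
    return indices[global_rank::world_size]


def mpi_rank_from_env() -> tuple[int, int]:
    """Read (rank, world size) as set by Open MPI; assumes a single process if unset."""
    rank = int(os.environ.get("OMPI_COMM_WORLD_RANK", "0"))
    size = int(os.environ.get("OMPI_COMM_WORLD_SIZE", "1"))
    return rank, size


if __name__ == "__main__":
    # Mirror the estimator above: 4 nodes, 1 process per node, 10 training samples.
    for p in process_layout(node_count=4, process_count_per_node=1):
        print(p.global_rank, shard_indices(list(range(10)), p.global_rank, p.world_size))
```

Strided sharding like this is similar in spirit to PyTorch's `torch.utils.data.distributed.DistributedSampler`, which additionally pads the index list so that every rank receives the same number of samples.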

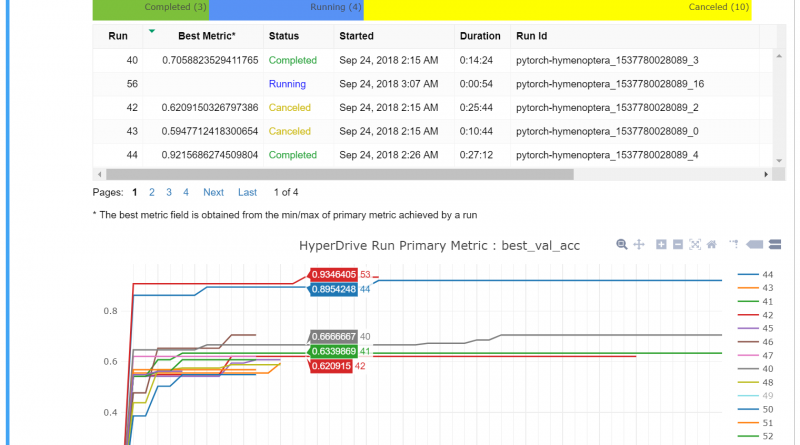

To further optimize your model's performance, Azure ML provides hyperparameter tuning capabilities that sweep through various hyperparameter combinations. Azure ML schedules your training jobs, terminates poor-performing runs early, and provides rich visualizations of the tuning process.

```python
from azureml.train.hyperdrive import (BanditPolicy, HyperDriveRunConfig,
                                      PrimaryMetricGoal, RandomParameterSampling,
                                      loguniform, uniform)

# parameter sampling: the search space is passed as a dict
param_sampling = RandomParameterSampling({
    "learning_rate": loguniform(-10, -3),
    "momentum": uniform(0.9, 0.99)
})

# early termination policy
policy = BanditPolicy(slack_factor=0.15, evaluation_interval=1)

# hyperparameter tuning configuration; train.py must log a metric named 'accuracy'
hd_run_config = HyperDriveRunConfig(estimator=pt_estimator,
                                    hyperparameter_sampling=param_sampling,
                                    policy=policy,
                                    primary_metric_name='accuracy',
                                    primary_metric_goal=PrimaryMetricGoal.MAXIMIZE,
                                    max_total_runs=100,
                                    max_concurrent_runs=10)

hd_run = experiment.submit(hd_run_config)
```
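To make the search space and early-termination policy above more concrete, here is a small standard-library sketch of what they compute. It assumes Azure ML's documented semantics: `loguniform(min, max)` draws `exp(uniform(min, max))`, so the learning rate falls between e^-10 and e^-3, and a Bandit policy cancels a run whose metric, scaled up by `1 + slack_factor`, still falls short of the best run's metric (for a maximized metric such as accuracy). The function names are hypothetical, and the service's real scheduling, evaluation intervals, and delays are omitted.

```python
import math
import random


def sample_config(rng: random.Random) -> dict:
    """Draw one configuration from the search space used above (hypothetical helper)."""
    return {
        # loguniform(-10, -3): exponentiate a uniform draw on [-10, -3]
        "learning_rate": math.exp(rng.uniform(-10, -3)),
        # uniform(0.9, 0.99)
        "momentum": rng.uniform(0.9, 0.99),
    }


def bandit_should_cancel(run_metric: float, best_metric: float,
                         slack_factor: float = 0.15) -> bool:
    """Bandit-style check for a maximized metric: cancel runs outside the slack."""
    return run_metric * (1 + slack_factor) < best_metric


if __name__ == "__main__":
    rng = random.Random(0)
    for _ in range(3):
        print(sample_config(rng))
    # Suppose the best run so far reports 0.85 accuracy at this evaluation interval.
    print(bandit_should_cancel(0.70, 0.85))  # 0.70 * 1.15 = 0.805 < 0.85, so True
    print(bandit_should_cancel(0.75, 0.85))  # 0.75 * 1.15 = 0.8625 >= 0.85, so False
```

Sampling the exponent rather than the learning rate itself spreads trials evenly across orders of magnitude, which is why log-uniform distributions are a common choice for learning rates.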

Finally, you can use the Python SDK to deploy your trained PyTorch models to Azure Container Instances or Azure Kubernetes Service.
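Deployments to Azure Container Instances or Azure Kubernetes Service with this SDK are driven by an entry (scoring) script that defines an `init()` function, called once when the container starts, and a `run()` function, called for each request with the raw request data. The sketch below shows only that shape. To keep it runnable without PyTorch, the trained model is replaced by a hypothetical `_StandInModel`, and the `{"data": [...]}` request format is an assumption; a real script would load your registered PyTorch model inside `init()`.

```python
import json

model = None  # set once by init() and reused across requests


class _StandInModel:
    """Hypothetical placeholder for a trained PyTorch model."""

    def predict(self, rows):
        # Stand-in scoring: sum the features of each input row.
        return [sum(row) for row in rows]


def init():
    """Called once at container start-up: load the model into memory."""
    global model
    # A real script would load the registered PyTorch model here, for example
    # with torch.load on the path returned by Model.get_model_path(...).
    model = _StandInModel()


def run(raw_data):
    """Called per request with the raw JSON body; returns a JSON-serializable result."""
    try:
        rows = json.loads(raw_data)["data"]
        return {"result": model.predict(rows)}
    except Exception as exc:  # report bad input to the caller instead of crashing
        return {"error": str(exc)}


if __name__ == "__main__":
    init()
    print(run(json.dumps({"data": [[1.0, 2.0], [3.0, 4.0]]})))
```

Loading the model in `init()` rather than in `run()` means the (potentially slow) deserialization happens once per container instead of once per request.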

For a comprehensive overview of Azure Machine Learning's full suite of offerings, as well as complete tutorials on training and deploying PyTorch models in Azure ML, see the Azure Machine Learning documentation.

Data Science Virtual Machine

Azure also provides the Data Science Virtual Machine (DSVM), a customized VM designed specifically for data science experimentation. The DSVM comes preconfigured with a comprehensive set of popular data science and deep learning tools, including PyTorch, which makes it a great option for a frictionless development experience when building PyTorch models. To take full advantage of PyTorch, use a GPU-based DSVM, which comes with the necessary GPU drivers and the GPU build of PyTorch preinstalled. The DSVM ships with the latest stable release, PyTorch 0.4.1, and can easily be upgraded to the PyTorch 1.0 preview.

Azure Notebooks

Azure Notebooks is a free, cloud-hosted Jupyter Notebooks solution that you can use for interactive coding in your browser. We preinstalled PyTorch on the Azure Notebooks container, so you can start experimenting with PyTorch without having to install the framework or run your own notebook server locally. In addition, we provide a maintained library of the official, up-to-date PyTorch tutorials on Azure Notebooks. Learning or getting started with PyTorch is as easy as creating your Azure account and cloning the tutorial notebooks into your own library.

Visual Studio Code Tools for AI

Visual Studio Code (VS Code) is a popular and lightweight source code editor. VS Code Tools for AI is a cross-platform extension that provides deep learning and AI experimentation features for data scientists and developers using the editor. Tools for AI is tightly integrated with the Azure Machine Learning service, so you can submit PyTorch jobs to Azure compute, track experiment runs, and deploy your trained models, all from within VS Code.

The Tools for AI extension also provides syntax highlighting, automatic code completion via IntelliSense, and built-in documentation search for PyTorch APIs.

Microsoft contributions to PyTorch

Finally, we are excited to join the amazing community of PyTorch developers in contributing innovations and enhancements to the PyTorch platform. Our immediate planned contributions focus on PyTorch data loading and processing: improving performance, adding support for reading speech datasets stored in formats defined by the Hidden Markov Model Toolkit (HTK), and providing a data loader for Azure Blob Storage. In addition, we are working toward complete parity for PyTorch on Windows and full ONNX coverage that adheres to the ONNX standard. We will also work closely with Microsoft Research (MSR) to incorporate MSR innovations into PyTorch.
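As a rough illustration of what reading HTK-defined formats involves, the sketch below parses an uncompressed HTK parameter file: a 12-byte big-endian header (number of samples, sample period in 100 ns units, bytes per sample, parameter kind) followed by float32 feature vectors. It is a standard-library sketch under those assumptions, not the planned PyTorch implementation; the function names are hypothetical, and compressed (`_C`) files, 16-bit waveform files, and checksums are out of scope.

```python
import struct
from typing import List, Tuple

# nSamples (int32), sampPeriod (int32, 100 ns units), sampSize (int16, bytes), parmKind (int16)
HTK_HEADER = struct.Struct(">iihh")
HTK_COMPRESSED = 0o2000  # _C qualifier bit in parmKind
HTK_MFCC = 6             # base parameter kind code for MFCC features


def read_htk_features(data: bytes) -> Tuple[int, List[List[float]]]:
    """Return (sample period, frames) from an uncompressed float32 HTK file."""
    n_samples, samp_period, samp_size, parm_kind = HTK_HEADER.unpack_from(data, 0)
    if parm_kind & HTK_COMPRESSED:
        raise ValueError("compressed HTK files are not handled by this sketch")
    if samp_size % 4 != 0:
        raise ValueError("expected float32 feature vectors")
    frame = struct.Struct(">%df" % (samp_size // 4))
    offset = HTK_HEADER.size
    frames = []
    for _ in range(n_samples):
        frames.append(list(frame.unpack_from(data, offset)))
        offset += samp_size
    return samp_period, frames


def write_htk_features(frames: List[List[float]], samp_period: int,
                       parm_kind: int = HTK_MFCC) -> bytes:
    """Serialize frames in the same layout (used here to build example input)."""
    dim = len(frames[0])
    out = bytearray(HTK_HEADER.pack(len(frames), samp_period, dim * 4, parm_kind))
    frame = struct.Struct(">%df" % dim)
    for values in frames:
        out += frame.pack(*values)
    return bytes(out)


if __name__ == "__main__":
    # Two 3-dimensional frames at a 10 ms frame period (100000 x 100 ns).
    blob = write_htk_features([[0.5, -1.0, 2.0], [0.25, 0.0, 4.0]], 100000)
    print(read_htk_features(blob))
```

A PyTorch data loader built on a reader like this would typically wrap each file's frames in a `Dataset`, so the same batching and shuffling machinery used for other data applies to speech features.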

At Microsoft we are dedicated to embracing and contributing to the advancements of such open-source AI technologies. Just a year ago we began our collaboration with Facebook on the Open Neural Network Exchange (ONNX), which promotes framework interoperability and shared optimization of neural networks through the ONNX model representation standard. With our commitment to PyTorch on Azure, we will continue to expand our ecosystem of AI offerings to enable richer development in research and industry.

Source: Azure Blog Feed