Microsoft empowers developers with new and updated Cognitive Services

This blog post was authored by Andy Hickl, Principal Group Program Manager, Microsoft Cognitive Services.

Today at the Build 2018 conference, we are unveiling several exciting new innovations for Microsoft Cognitive Services on Azure.

At Microsoft, we believe any developer should be able to integrate the best AI has to offer into their apps and services. That’s why we started Microsoft Cognitive Services three years ago – and why we continue to invest in AI services on Azure today.

Microsoft Cognitive Services make it easy for developers to add high-quality vision, speech, language, knowledge and search technologies to their apps with only a few lines of code. Cognitive Services make it possible for anyone to create intelligent apps, including ones that can talk to users naturally, identify relevant content in images, and confirm someone’s identity using their voice or appearance.

At Build this year, we’re offering even more services and more innovation in Cognitive Services, with announcements such as a unified Speech service and Bing Visual Search, as well as the expansion of our Cognitive Services Labs of emerging technologies. We’re also empowering developers to customize the pre-built AI offered by Cognitive Services, with customized object detection, added features for Bing Custom Search (including custom image search and custom autosuggest), and new speech features such as custom translator, custom voice, custom speech, and more. We’re also bringing AI to developers everywhere by making Cognitive Services available on all of the computing platforms developers build on today, with the ability to export Custom Vision models to support deployments on edge and mobile devices. Lastly, we also have some of the most powerful bot tools in the market to create more natural human and computer interactions.

To date, more than a million developers have discovered and tried Microsoft Cognitive Services, including top companies such as British Telecom, Box, KPMG, and Big Fish Games, as well as through an API integration with PubNub.

Cognitive Services updates

Let’s talk in detail about some of the exciting new updates coming to Cognitive Services at Build.

Vision

- Computer Vision unlocks the knowledge stored in images. It now integrates an improved OCR model for English (in preview), and its captioning has been expanded to new languages (Simplified Chinese, Japanese, Spanish and Brazilian Portuguese).

- Custom Vision (in preview) makes it easy to build and refine customized image classifiers that can identify specific content in images. Today, we’re announcing that Custom Vision now performs object recognition as well. Developers can now use Custom Vision to train models that can recognize the precise location of specific objects in images. In addition, developers can now download Custom Vision models in three formats: TensorFlow, CoreML, and ONNX.

- Video Indexer, the Cognitive Service that extracts insights from videos, can now be connected to an Azure account.

Speech & Machine Translation

- We’re announcing a new unified Speech service in preview. Developers will now have access to a single entry point to perform:

- Speech to Text (speech transcription) – converting spoken audio to text with standard or custom models tailored to specific vocabulary or speaking styles of users (language model customization), or to better match the expected environment, such as with background noise (acoustic model customization). Speech to Text technology enables a wide range of use cases like voice commands, real-time transcriptions, and call center log analysis.

- Text to Speech (speech synthesis) – bringing voice to any app by converting text to audio in near real time with the choice of over 75 default voices, or with the new custom voice models, creating a unique and recognizable brand voice tuned to your own recordings.

- Speech Translation – providing real-time speech translation capabilities with models based on neural machine translation (NMT) technologies. Three elements of the Speech Translation pipeline can now be customized through a dedicated tool: speech recognition, text to speech and machine translation.

- We’re also announcing the availability of the Speech devices SDK in preview by invitation (pre-packaged software fine-tuned to specific hardware) as well as the new Speech client SDK in preview (bringing together the various speech features, support for multiple platforms and different language bindings).

- We continue to build on our amazing machine translation Cognitive Services. We’re announcing support for the customization of neural machine translation. In addition, we’re making significant updates to the Microsoft Translator text API (version 3), unveiling a new version of the service natively using Neural Machine Translation. We also announced a new Microsoft Translator feature for Android that will make it possible to integrate translation capabilities into any app – even without an Internet connection.

Language

- Text Analytics is a cloud-based service that provides advanced natural language processing over raw text, performing sentiment analysis, key phrase extraction and language detection. Today, Text Analytics introduces the ability to perform entity identification and linking from raw text. Entity linking recognizes and disambiguates well-known entities found in text, such as people, places, organizations, and more, while linking to more information on the web.

- Our Language Understanding (LUIS) service simplifies the speech to intent process with a new integrated offer.

Knowledge

- QnA Maker is a service that makes it possible to respond to users’ questions in a more natural, conversational way. Today, we’re announcing that QnA Maker is Generally Available on Azure.

Search

Search has been another key area of AI investment: we’re empowering developers to leverage it through multiple search APIs. Today we’re announcing several major updates for Bing Search APIs:

- Bing Visual Search, a new service in general availability that lets you harness the capabilities of Bing Search to search by image: for example, finding products similar to a given product (in a retail scenario, a user may want to see products similar to the one being considered), or identifying barcodes, text, celebrities, and more. We’re also announcing a preview-by-invitation portal for intelligent Visual Search.

- Bing Statistics add-in, which provides various metrics and rich slicing and dicing capabilities for several Bing APIs.

- Bing Custom Search updates, including custom image search, custom autosuggest, specific statistics information, and instance staging and sharing capabilities.

- The Bing APIs SDK now in general availability.

Labs

- We’re also announcing new Cognitive Services labs, providing developers with an early look at the emerging Cognitive Services technologies, such as:

- Project Answer Search – enables you to enhance your users’ search experience by automatically retrieving and displaying commonly known facts and information from across the internet.

- Project URL Preview – informs your users’ social interactions by enabling creation of web page previews from a given URL or flagging adult content to suppress it.

- Project Anomaly Finder enables developers to monitor their data over time and detect anomalies by automatically applying a statistical model.

- Project Conversation Learner by invite – enables you to build and teach conversational interfaces that learn directly from example interactions. Spanning a broad set of task-oriented use cases, Project Conversation Learner applies machine learning behind the scenes to decrease manual coding of dialogue control logic.

- Project Personality Chat – available soon by invitation – makes intelligent agents more complete and conversational by handling common small talk in a consistent tone and fallback responses. Give your agent a personality by choosing from multiple default personas, and enhance it to create a character that aligns with your brand voice.

- Project Ink Analysis – available soon by invitation – provides digital ink recognition and layout analysis through REST APIs. Using various machine learning techniques, the service analyzes the ink stroke input and provides information about the layout and contents of those ink strokes. Project Ink Analysis can be used for a variety of scenarios that involve ink to text as well as ink to shape conversions.
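The statistical model that Project Anomaly Finder applies is not described in this post. Purely as a generic illustration of what detecting anomalies in data over time can mean, the sketch below flags points that sit far from the mean of the preceding window, measured in standard deviations. The function name, window size, and threshold are all hypothetical choices, not part of the service.

```python
from statistics import mean, stdev


def find_anomalies(values, window=5, threshold=3.0):
    """Return the indices of values that deviate from the mean of the
    preceding `window` values by more than `threshold` sample standard
    deviations. Illustrative only; not the Anomaly Finder algorithm."""
    if window < 2:
        raise ValueError("window must be at least 2 to estimate a deviation")
    anomalies = []
    for i in range(window, len(values)):
        history = values[i - window:i]
        mu = mean(history)
        sigma = stdev(history)
        if sigma == 0:
            # A perfectly flat history: any change at all counts as unusual.
            if values[i] != mu:
                anomalies.append(i)
        elif abs(values[i] - mu) / sigma > threshold:
            anomalies.append(i)
    return anomalies


# Hypothetical sensor readings with one obvious spike at index 6.
readings = [10.0, 10.2, 9.9, 10.1, 10.0, 10.1, 25.0, 10.0, 9.8]
anomalous_indices = find_anomalies(readings)
```

A trailing window is used so that each point is judged only against data that arrived before it, which matches the idea of monitoring data as it comes in.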

Let’s take a closer look at what some of these newly announced services can do for you.

Object Detection with the Custom Vision service

The Custom Vision service preview is an easy-to-use, customizable web service that learns to recognize specific content in imagery, powered by state-of-the-art machine learning neural networks that become more accurate with training. You can train it to recognize whatever you choose, whether that be animals, objects, or abstract symbols. This technology could easily apply to retail environments for machine-assisted product identification, or to digital spaces to help automatically sort pictures into categories.

With today’s update, it’s now possible to easily create custom image classification and object recognition models.

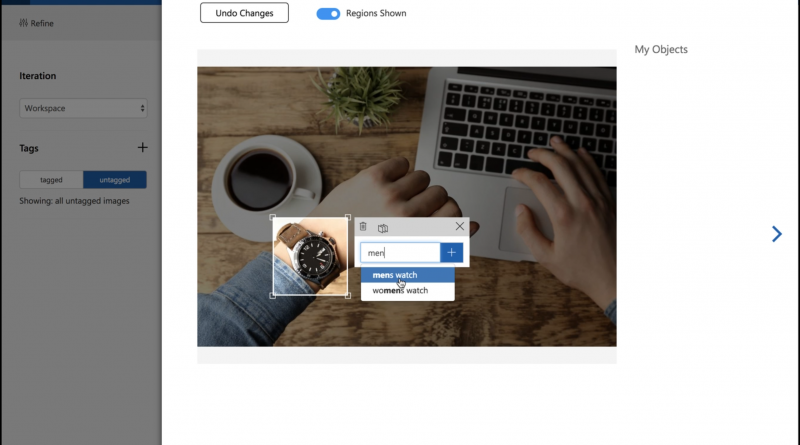

Using the Custom Vision service portal, you can upload and annotate images, train image classification models, and run the classifier as a web service. Custom Vision now supports custom object recognition as well. You can use the Custom Vision portal to find and label specific objects within an image. You’ll be able to create bounding boxes to specify the exact location of the objects you’re most interested in within each image.

An easy way to label objects within the image with bounding boxes
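If you label images from code rather than in the portal, a bounding box is typically expressed relative to the image size so that it stays valid when the image is resized. The sketch below shows that conversion from pixel coordinates. The `Region` type, its field names, and the normalized left/top/width/height layout are assumptions made for illustration; check the Custom Vision documentation for the exact format the service expects.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Region:
    """A labeled bounding box in normalized (0..1) image coordinates.

    Hypothetical type for illustration; not a Custom Vision SDK class.
    """
    tag: str
    left: float
    top: float
    width: float
    height: float


def pixel_box_to_region(tag, x_min, y_min, x_max, y_max, image_width, image_height):
    """Convert a pixel-space box into a normalized Region.

    Raises ValueError if the box is empty or falls outside the image.
    """
    if image_width <= 0 or image_height <= 0:
        raise ValueError("image dimensions must be positive")
    if not (0 <= x_min < x_max <= image_width and 0 <= y_min < y_max <= image_height):
        raise ValueError("box must be non-empty and lie inside the image")
    return Region(
        tag=tag,
        left=x_min / image_width,
        top=y_min / image_height,
        width=(x_max - x_min) / image_width,
        height=(y_max - y_min) / image_height,
    )


# A 200x200 pixel box around a bicycle in a 400x500 pixel image.
region = pixel_box_to_region("bicycle", 100, 50, 300, 250, image_width=400, image_height=500)
```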

Last but not least, thanks to Custom Vision’s latest updates, it’s also possible to export the trained model for use in a container, which can be deployed on-premises, on mobile devices, and even on IoT devices.

We created fresh tutorials to help you get started with Custom Vision:

- Explore the C# tutorial and Python tutorial in the documentation, which walk through a basic Windows application that creates an object detection project. This includes creating both a console application and a Custom Vision service project, adding tags, uploading various images to the project, and identifying the regions of each object by using coordinates and a tag. You can also find the detailed guide to exporting your model as a container.

- Get started on the Custom Vision service portal and webpage.

Build your own one-of-a-kind Custom Voice with the new Speech service

Part of the unified Speech service preview announced today at Build, the text-to-speech service allows you to create a unique, recognizable voice from your own acoustic data (currently for English and Chinese). The possibilities are endless: a smart IVR, bot, or application could use an iconic brand voice on the go, without the need for deep expertise in data science.

To get started, you’ll be guided through the process of developing the necessary audio datasets and transcripts and uploading them to the Custom Voice portal (accessible by invite).

Once that’s done, you train the service to create a new voice model, which is then deployed to a Text-to-Speech endpoint.

Custom Voice customization tool
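Text-to-speech requests are commonly written in SSML (Speech Synthesis Markup Language, a W3C standard), where a `voice` element selects the voice to use. As a rough sketch of how an app might ask for a custom voice once it is deployed, the helper below builds such a document. The voice name `contoso-brand-voice` is hypothetical, and how the document is sent to your endpoint (URL, headers, authentication) is left out; see the technical guide for the details.

```python
from xml.sax.saxutils import escape, quoteattr


def build_ssml(text, voice_name, language="en-US"):
    """Return an SSML document asking for `text` to be spoken by `voice_name`.

    The text and attribute values are XML-escaped so that characters such
    as & and < cannot break the markup.
    """
    return (
        f'<speak version="1.0" xmlns="http://www.w3.org/2001/10/synthesis" '
        f"xml:lang={quoteattr(language)}>"
        f"<voice name={quoteattr(voice_name)}>{escape(text)}</voice>"
        f"</speak>"
    )


# "contoso-brand-voice" is a made-up name standing in for your deployed model.
ssml = build_ssml("Thanks for calling. How can I help?", "contoso-brand-voice")
```

Building the markup through an escaping helper, rather than plain string concatenation, keeps user-supplied text from producing invalid XML.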

For more information about the new Custom Voice capabilities of the new Speech service, please take a look at the following resources:

- The Text-to-Speech webpage and Custom Voice portal

- The detailed technical guide to building your own custom voice.

Perform smart product search with Bing Visual Search

Bing Visual Search provides insights with a similar experience to the image details shown on Bing.com/images. For example, you can upload a picture and get back search insights about the image such as visually similar images, shopping sources, webpages that include the image, and more.

Instead of uploading an image, you can also provide the URL of the image, along with an optional crop box to guide your visual search.
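As a sketch of what such a request description might look like, the helper below builds a small JSON document with the image URL and an optional crop box given as fractions of the image size. The field names (`imageInfo`, `url`, `cropArea`, and the four edges) are assumptions here and should be checked against the Bing Visual Search documentation, as should the way the JSON is attached to the HTTP request.

```python
import json


def build_knowledge_request(image_url, crop_area=None):
    """Build a JSON string describing the image to search for.

    crop_area is an optional (top, left, bottom, right) tuple of fractions
    of the image height and width, each between 0 and 1.
    Field names are assumed for illustration.
    """
    image_info = {"url": image_url}
    if crop_area is not None:
        top, left, bottom, right = crop_area
        if not (0 <= top < bottom <= 1 and 0 <= left < right <= 1):
            raise ValueError("crop_area needs fractions with top < bottom and left < right")
        image_info["cropArea"] = {"top": top, "left": left, "bottom": bottom, "right": right}
    return json.dumps({"imageInfo": image_info}, sort_keys=True)


# Search using only the central part of a (hypothetical) product photo.
body = build_knowledge_request("https://example.com/shoe.jpg", crop_area=(0.25, 0.25, 0.75, 0.75))
```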

For example, you can receive visually similar images (a list of images that are visually and semantically similar to the input image or regions within it) or visually similar products (a list of images that contain products that are visually and semantically similar to the products in the input image, which you can restrict to your own domain), and more.

Similar image results with Bing Visual Search, powered by Bing

The Bing Visual Search results also provide bounding boxes for regions of interest in the image. For example, if the image contains celebrities, the results may include bounding boxes for each of the recognized celebrities in the image. Similarly, if Bing recognizes a fashion object in the image, the result may provide a bounding box for the recognized product.

When making a Bing Visual Search API request, if there are insights available for the image, the response will contain one or more tags that contain the insights, such as webpages that include the image, visually similar images, and shopping sources for items found in the image.
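To make the shape of that response more concrete, here is a minimal sketch that walks the tags of an already-parsed JSON response and groups the insight items by type. The key names (`tags`, `actions`, `actionType`, `data`, `value`) and the sample payload are assumptions for illustration only; real responses may nest some insights differently, so treat the documentation as the reference.

```python
from collections import defaultdict


def insights_by_type(response):
    """Group the insight items found in a Visual Search response by type.

    `response` is the parsed JSON body as a dict. Missing keys are
    tolerated so that a response with no insights yields an empty dict.
    """
    grouped = defaultdict(list)
    for tag in response.get("tags", []):
        for action in tag.get("actions", []):
            items = action.get("data", {}).get("value", [])
            grouped[action.get("actionType", "Unknown")].extend(items)
    return dict(grouped)


# A made-up response with one default tag and one tag for a recognized object.
sample_response = {
    "tags": [
        {
            "displayName": "",
            "actions": [
                {"actionType": "PagesIncluding",
                 "data": {"value": [{"hostPageUrl": "https://example.com/a"}]}},
                {"actionType": "VisualSearch",
                 "data": {"value": [{"contentUrl": "https://example.com/1.jpg"},
                                    {"contentUrl": "https://example.com/2.jpg"}]}},
            ],
        },
        {
            "displayName": "Bicycle",
            "actions": [
                {"actionType": "ShoppingSources",
                 "data": {"value": [{"name": "Example store"}]}},
            ],
        },
    ]
}

grouped = insights_by_type(sample_response)
counts = {insight_type: len(items) for insight_type, items in grouped.items()}
```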

For more information about Bing Visual Search, please take a look at:

- The Bing Visual Search webpage

- Detailed technical walkthrough in the documentation

Thank you again and happy coding!

Andy

Source: Azure Blog Feed