Azure HDInsight now supports Apache Spark 2.3

Apache Spark 2.3.0 is now available for production use on the managed big data service Azure HDInsight. Ranging from bug fixes (more than 1400 tickets were fixed in this release) to new experimental features, Apache Spark 2.3.0 brings advancements and polish to all areas of its unified data platform.

Data engineers relying on Python UDFs get a 10 to 100 times speedup, thanks to revamped object serialization between the Spark runtime and Python. Data scientists will be delighted by better integration of deep learning frameworks like TensorFlow with Spark machine learning pipelines. Business analysts will welcome the fast vectorized reader for the ORC file format, which finally makes interactive analytics in Spark practical over this popular columnar data format. Developers building real-time applications may be interested in experimenting with the new Continuous Processing mode in Spark Structured Streaming, which brings event processing latency down to the millisecond level.

Vectorized object serialization in Python UDFs

PySpark is already fast and takes advantage of the vectorized data processing in the core Spark engine as long as you use the DataFrame APIs. This is good news, because DataFrames cover the majority of use cases if you follow best practices for Spark 2.x. But until Spark 2.3 there was one large exception to this rule. If you processed your data using Python UDFs, Spark used the standard Python Pickle serialization mechanism to pass data back and forth between the Java and Python runtimes one row at a time. While Pickle is a robust mechanism, it is not efficient in this setting for big data workloads that routinely process terabytes of data. Starting with version 2.3, Spark can use the new Apache Arrow serializer, which relies on a columnar, binary data representation shared between the Java and Python runtimes. This enables vectorized processing on modern processors, which results in a significant performance boost. The new functionality is exposed through the new Pandas UDF APIs, which also make it possible to use the popular Pandas APIs inside your Python UDFs. If you write your UDF with the new Pandas UDF APIs, you can see a performance gain of 10 to 100 times. Pandas UDFs use Arrow without extra configuration, while the Arrow-based conversion between Spark and Pandas DataFrames (toPandas() and createDataFrame()) is disabled by default. To enable that conversion, set the following property to true:

spark.sql.execution.arrow.enabled = true
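As an illustration of the Pandas UDF APIs described above, here is a minimal sketch of a scalar Pandas UDF. It assumes PySpark 2.3 or later with pandas and pyarrow installed on the driver and executors. The function name, app name, and sample data are made up for the example.

```python
def to_fahrenheit(celsius):
    """Convert Celsius to Fahrenheit.

    Written with plain arithmetic, so it works on a whole pandas.Series
    (one call per batch of rows) as well as on a single float.
    """
    return celsius * 9.0 / 5.0 + 32.0


def run_on_spark():
    # Imports are local so the conversion function above can be exercised
    # without a Spark installation.
    from pyspark.sql import SparkSession
    from pyspark.sql.functions import pandas_udf
    from pyspark.sql.types import DoubleType

    spark = (
        SparkSession.builder
        .appName("pandas-udf-sketch")  # hypothetical app name
        .config("spark.sql.execution.arrow.enabled", "true")
        .getOrCreate()
    )

    # Wrap the function as a scalar Pandas UDF: Spark hands it
    # Arrow-backed pandas.Series batches instead of single rows.
    to_fahrenheit_udf = pandas_udf(to_fahrenheit, DoubleType())

    # Made-up sample data.
    readings = spark.createDataFrame([(0.0,), (37.0,), (100.0,)], ["celsius"])
    readings.withColumn(
        "fahrenheit", to_fahrenheit_udf(readings["celsius"])
    ).show()
    spark.stop()
```

Calling `run_on_spark()` from a PySpark session on the cluster runs the UDF. The conversion logic itself stays an ordinary Python function that can be unit tested on its own.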

Fast native reader for ORC format

Spark support for the ORC file format used to be subpar compared to the competing Parquet format. Both formats solve the same problem of efficient, column-oriented access to data, but for a long time Spark worked with Parquet files much better than with ORC. All of this changes in Spark 2.3.0, for which Hortonworks contributed a fast native vectorized reader for the ORC file format. The new native reader speeds up queries by 2x to 5x, which puts it in head-to-head competition with Parquet speeds. This level of performance makes mixed scenarios practical. For example, customers on HDInsight frequently choose the ORC format for Interactive Query clusters powered by Hive LLAP technology. Now they can perform data science in Spark more efficiently against the shared ORC dataset.

The new vectorized reader/writer is a fully redesigned code base, which not only brings a native implementation but also resolves several issues of the old code base. The ORC writer now produces files compatible with the latest ORC format, supports predicate pushdown, and resolves issues with using the format in Structured Streaming jobs. On top of that, the new ORC support includes schema evolution, which allows users to add or remove columns as the data files added to the table evolve. Unlike the Parquet reader, it also supports changes to column types as long as one type can be upcast to the other, e.g. float -> double. This puts the ORC reader ahead of Parquet, but some limitations remain. One of them is bucketing, a powerful technique that is not yet supported in the ORC reader and is only available with Parquet. In Spark 2.3.0 the new native reader is not enabled by default for backward compatibility reasons. To enable it across the spectrum of use cases, change the following properties:

spark.sql.orc.impl=native

spark.sql.hive.convertMetastoreOrc=true
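The two properties can also be set per application when the session is created. The following is a minimal sketch, assuming PySpark 2.3 or later. The helper names, the app name, and the path argument are hypothetical.

```python
# The two properties named above, kept in one place.
ORC_NATIVE_SETTINGS = {
    "spark.sql.orc.impl": "native",
    "spark.sql.hive.convertMetastoreOrc": "true",
}


def build_session(app_name="orc-native-sketch"):  # hypothetical app name
    # Local import so the settings can be inspected without Spark installed.
    from pyspark.sql import SparkSession

    builder = SparkSession.builder.appName(app_name)
    for key, value in ORC_NATIVE_SETTINGS.items():
        builder = builder.config(key, value)
    return builder.getOrCreate()


def count_orc_rows(spark, path):
    # `path` is a placeholder for the location of your ORC files.
    return spark.read.orc(path).count()
```

Setting the properties in the cluster-wide Spark configuration instead makes the native reader the default for every job.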

Continuous processing (experimental) in Structured Streaming

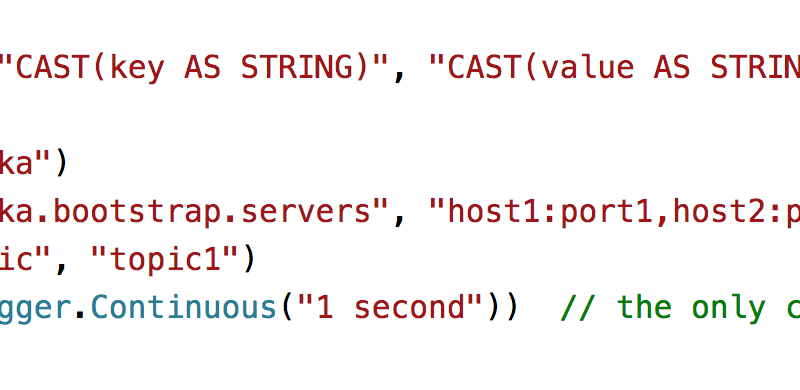

Continuous processing mode is now part of Spark Structured Streaming. It is a new execution engine for streaming jobs that moves away from the micro-batching architecture of Spark and brings true stream processing of individual events. The advantage of the new mode is a dramatic improvement in event processing latency, which can now be as low as about one millisecond. This is important for latency-sensitive use cases such as fraud detection, ad placement, and message bus architectures. It is also very easy to enable in a Structured Streaming job: set the trigger mode to Trigger.Continuous and leave the rest of the job unchanged.

Besides being an experimental feature, this new capability has significant limitations. Only map-like operations (such as select/map and where/filter) are supported in Continuous processing mode, and the delivery guarantees are weaker. The standard micro-batch mode in Structured Streaming supports exactly-once processing, while Continuous mode only supports at-least-once processing guarantees.
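The sketch below shows what switching a query to continuous mode can look like in PySpark 2.3 or later. It uses the built-in rate test source and the console sink and applies only map-like operations. The function name and the one-second checkpoint interval are assumptions made for the example.

```python
# Hypothetical value: how often the continuous engine records progress.
CHECKPOINT_INTERVAL = "1 second"


def start_continuous_query(spark):
    """Start a continuous-mode query on an existing SparkSession."""
    # The "rate" source generates (timestamp, value) rows for testing.
    events = (
        spark.readStream
        .format("rate")
        .option("rowsPerSecond", 10)
        .load()
    )

    # Only map-like operations (select, where) are used here.
    even_events = events.where("value % 2 = 0").select("timestamp", "value")

    # The continuous trigger is the only change compared to a
    # micro-batch version of the same query.
    return (
        even_events.writeStream
        .format("console")
        .trigger(continuous=CHECKPOINT_INTERVAL)
        .start()
    )
```

Replacing the trigger line with `.trigger(processingTime="1 second")` would turn the same query back into a micro-batch job.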

Other improvements

Stream-to-stream joins

When Structured Streaming was originally introduced in Spark 2.0, only stream-to-static joins were supported. This is limiting for a number of scenarios where two streams of data need to be correlated. With Spark 2.3 it is now possible to join two streams. Watermarks and time constraints can be used to control how much data from the two streams is buffered to perform the join.
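A minimal sketch of such a join in PySpark 2.3 or later follows. It assumes two streaming DataFrames of ad impressions and clicks. The column names, watermark delays, and one-hour time constraint are illustrative assumptions, not values from this announcement.

```python
# Join condition: same ad, and the click happens within one hour
# of the impression. The time range bounds how long rows are buffered.
JOIN_CONDITION = """
    clickAdId = impressionAdId
    AND clickTime >= impressionTime
    AND clickTime <= impressionTime + interval 1 hour
"""


def join_clicks_to_impressions(impressions, clicks):
    """Inner-join two streaming DataFrames.

    Assumes `impressions` has columns impressionAdId and impressionTime,
    and `clicks` has columns clickAdId and clickTime (hypothetical names).
    """
    from pyspark.sql.functions import expr

    # Watermarks tell Spark how late events may arrive in each stream.
    impressions_wm = impressions.withWatermark("impressionTime", "2 hours")
    clicks_wm = clicks.withWatermark("clickTime", "3 hours")

    return impressions_wm.join(clicks_wm, expr(JOIN_CONDITION))
```

Together, the watermarks and the time-range condition let Spark discard buffered rows that can no longer find a match.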

Deep learning Integration

Spark can now be used to integrate models from the popular deep learning library TensorFlow (and Keras) as Spark ML Transformers. To help with that, it also offers built-in functions to read images from files and represent them as a DataFrame.
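The image-reading part can be sketched as follows, assuming PySpark 2.3, where `ImageSchema.readImages` is available, and an already active SparkSession. The helper names and the directory argument are hypothetical, and the TensorFlow integration itself is not shown.

```python
# Fields of the "image" struct column selected in this sketch.
IMAGE_FIELDS = ["origin", "height", "width", "nChannels"]


def image_columns():
    """Return the nested column names to select, e.g. 'image.origin'."""
    return ["image." + field for field in IMAGE_FIELDS]


def load_image_metadata(image_dir):
    """Read images from a directory and return their metadata.

    `image_dir` is a placeholder for a path readable by the cluster.
    """
    from pyspark.ml.image import ImageSchema

    # Each row holds one image in a struct column named "image".
    images = ImageSchema.readImages(image_dir)
    return images.select(*image_columns())
```

The raw pixel bytes are stored in the same struct, so a downstream Transformer can consume the DataFrame directly.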

DataSource API v2 (beta)

One of the strengths of Spark is its broad support for a variety of data sources. You can connect to Cassandra, HBase, Azure Blob Storage, the new Azure Data Lake Storage (gen2), Kafka, and many others, and process the data in a consistent way using Spark. In the new version, the APIs used to create these data sources get a major refactoring. The new APIs remove the dependency on higher-level classes such as SparkContext and DataFrame and provide compact, precise interfaces for a broader set of primitives, which enables data source developers to implement more optimizations. These include data size and partitioning information, support for streaming as well as batch-oriented sources, and support for transactional writes. All of these changes are transparent to Spark end users.

Spark on Kubernetes (experimental)

As the popularity of the Kubernetes clustering framework grows, Spark gains a native capability to schedule Spark jobs directly on a Kubernetes cluster. Jobs need to be packaged as a Docker image available in a repository. A basic image is provided with Spark and can be used to load apps dynamically or as a base for your own custom image.

More details about HDInsight Spark are available. You can also find a detailed list of fixed issues in the Spark 2.3.0 release notes.

Try HDInsight now

We hope you take full advantage of the new Spark capabilities, and we are excited to see what you will build with Azure HDInsight. Read this developer guide and follow the quick start guide to learn more about implementing these pipelines and architectures on Azure HDInsight. Stay up to date on the latest Azure HDInsight news and features by following us on Twitter #HDInsight and @AzureHDInsight. For questions and feedback, please reach out to AskHDInsight@microsoft.com.

About HDInsight

Azure HDInsight is Microsoft’s premium managed offering for running open source workloads on Azure. Today, we are excited to announce several new capabilities across a wide range of OSS frameworks.

Azure HDInsight powers mission-critical applications for some of our top customers across a wide variety of sectors, including manufacturing, retail, education, nonprofit, government, healthcare, media, banking, telecommunication, insurance, and many more, with use cases ranging from ETL to data warehousing and from machine learning to IoT.

Source: Azure Blog Feed