Schema validation with Event Hubs

Event Hubs is a fully managed, real-time data ingestion service on Azure. It integrates seamlessly with other Azure services. It also exposes a Kafka endpoint, so Apache Kafka clients and applications can talk to Event Hubs without code changes; only their connection configuration changes.
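
This sketch shows what "without code changes" means in practice. The function builds the Kafka client properties that point a Java-style Kafka client at an Event Hubs namespace. The settings are the Event Hubs Kafka endpoint on port 9093, SASL PLAIN over TLS, and the literal user name `$ConnectionString`. The namespace and connection string are hypothetical placeholders, and the helper names are illustrative.

```python
def event_hubs_kafka_config(namespace: str, connection_string: str) -> dict[str, str]:
    """Build Kafka client properties that target an Event Hubs namespace."""
    jaas = (
        "org.apache.kafka.common.security.plain.PlainLoginModule required "
        f'username="$ConnectionString" password="{connection_string}";'
    )
    return {
        # The namespace host serves the Kafka protocol on port 9093.
        "bootstrap.servers": f"{namespace}.servicebus.windows.net:9093",
        # TLS plus SASL PLAIN; the password is the namespace connection string.
        "security.protocol": "SASL_SSL",
        "sasl.mechanism": "PLAIN",
        "sasl.jaas.config": jaas,
    }


def to_properties(config: dict[str, str]) -> str:
    """Render settings in .properties syntax, one key=value per line."""
    return "\n".join(f"{key}={value}" for key, value in config.items())


if __name__ == "__main__":
    # Hypothetical placeholder values; substitute your own namespace and key.
    conn = ("Endpoint=sb://my-namespace.servicebus.windows.net/;"
            "SharedAccessKeyName=<key-name>;SharedAccessKey=<key>")
    print(to_properties(event_hubs_kafka_config("my-namespace", conn)))
```

The producer and consumer code stay the same. Only these properties change when you switch from a Kafka cluster to Event Hubs.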

Apache Avro is a binary serialization format. It relies on schemas, defined in JSON, that specify which fields are present and their types. Because Event Hubs treats event payloads as opaque bytes, you can produce and consume Avro messages to and from Event Hubs.
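
The sketch below shows why the schema matters. It is a hand-rolled subset of Avro's binary encoding, covering only `long`/`int`, `string` and `boolean` record fields; it is not the official Avro library, and real code would use `avro` or `fastavro`. Fields are written in schema order with no field names, as zig-zag varints and length-prefixed UTF-8. A consumer therefore cannot decode the bytes without the writer's schema. The `SensorReading` schema is a made-up example.

```python
import json

# Hypothetical example schema, in Avro's JSON schema syntax.
SCHEMA_JSON = """
{"type": "record", "name": "SensorReading",
 "fields": [{"name": "id", "type": "long"},
            {"name": "device", "type": "string"},
            {"name": "active", "type": "boolean"}]}
"""


def zigzag_varint(n: int) -> bytes:
    """Encode a signed 64-bit integer with zig-zag mapping plus base-128 varint."""
    z = (n << 1) ^ (n >> 63)  # 0, -1, 1, -2 ... -> 0, 1, 2, 3 ...
    out = bytearray()
    while z & ~0x7F:           # more than 7 significant bits remain
        out.append((z & 0x7F) | 0x80)
        z >>= 7
    out.append(z)
    return bytes(out)


def read_varint(buf: bytes, pos: int) -> tuple[int, int]:
    """Decode one zig-zag varint starting at pos; return (value, next_pos)."""
    z, shift = 0, 0
    while True:
        b = buf[pos]
        pos += 1
        z |= (b & 0x7F) << shift
        if not b & 0x80:
            break
        shift += 7
    return (z >> 1) ^ -(z & 1), pos


def encode(record: dict, schema: dict) -> bytes:
    """Write field values in schema order; no field names reach the output."""
    out = bytearray()
    for field in schema["fields"]:
        value, ftype = record[field["name"]], field["type"]
        if ftype in ("int", "long"):
            out += zigzag_varint(value)
        elif ftype == "boolean":
            out.append(1 if value else 0)
        elif ftype == "string":
            data = value.encode("utf-8")
            out += zigzag_varint(len(data)) + data
        else:
            raise NotImplementedError(f"type {ftype!r} is outside this sketch")
    return bytes(out)


def decode(buf: bytes, schema: dict) -> dict:
    """Read the bytes back; only possible because we know the schema."""
    record, pos = {}, 0
    for field in schema["fields"]:
        name, ftype = field["name"], field["type"]
        if ftype in ("int", "long"):
            record[name], pos = read_varint(buf, pos)
        elif ftype == "boolean":
            record[name] = buf[pos] == 1
            pos += 1
        elif ftype == "string":
            length, pos = read_varint(buf, pos)
            record[name] = buf[pos:pos + length].decode("utf-8")
            pos += length
        else:
            raise NotImplementedError(f"type {ftype!r} is outside this sketch")
    return record
```

For example, `encode({"id": 1, "device": "a", "active": True}, json.loads(SCHEMA_JSON))` yields four bytes, `b"\x02\x02a\x01"`. Nothing in them says which byte belongs to which field.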

Event Hubs' focus is on the data pipeline. It doesn't validate the schema of the Avro events.

If producers and consumers are expected to fall out of sync on event schemas, there needs to be a "source of truth" for schema tracking that both producers and consumers can consult.
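
To make the idea of a shared source of truth concrete, here is a toy, single-process model. Schemas are grouped by subject, every change gets a new version number, and both sides ask for a specific or latest version. The class and the subject name `readings-value` are hypothetical; this is not how any real registry is implemented.

```python
import json


class InMemorySchemaRegistry:
    """Toy registry: each subject maps to an ordered list of schema versions."""

    def __init__(self) -> None:
        self._subjects: dict[str, list[str]] = {}

    def register(self, subject: str, schema: dict) -> int:
        """Store a schema and return its 1-based version number."""
        # Canonical JSON so that key order does not create spurious versions.
        canonical = json.dumps(schema, sort_keys=True, separators=(",", ":"))
        versions = self._subjects.setdefault(subject, [])
        if canonical in versions:  # identical schema: reuse its version
            return versions.index(canonical) + 1
        versions.append(canonical)
        return len(versions)

    def subjects(self) -> list[str]:
        return sorted(self._subjects)

    def versions(self, subject: str) -> list[int]:
        return list(range(1, len(self._subjects[subject]) + 1))

    def get(self, subject: str, version: int) -> dict:
        versions = self._subjects[subject]
        if not 1 <= version <= len(versions):
            raise KeyError(f"{subject} has no version {version}")
        return json.loads(versions[version - 1])

    def latest(self, subject: str) -> dict:
        return json.loads(self._subjects[subject][-1])
```

A producer would register before writing, and a consumer would fetch the version the producer used. Neither has to ship schemas to the other directly.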

Confluent has a product for this, called Schema Registry. It is part of Confluent's open source offering.

Schema Registry can store schemas, list schemas, list all versions of a given schema, retrieve a specific version of a schema, get the latest version of a schema, and validate schemas. It has a UI, and you can also manage schemas through its REST API.
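
The operations above map onto Schema Registry's REST endpoints. This sketch only builds `urllib.request.Request` objects and does not send them, so it runs without a server. It covers `GET /subjects`, `GET /subjects/{subject}/versions[/{version}]`, `POST /subjects/{subject}/versions` and `POST /compatibility/subjects/{subject}/versions/{version}`. The base URL `http://localhost:8081` and the class name are assumptions for illustration; check the Schema Registry API reference for your version.

```python
import json
import urllib.parse
import urllib.request
from typing import Optional, Union

# Media type used by the Schema Registry REST API.
MEDIA_TYPE = "application/vnd.schemaregistry.v1+json"


class SchemaRegistryRequests:
    """Builds, but does not send, requests for the Schema Registry REST API."""

    def __init__(self, base_url: str) -> None:
        self.base_url = base_url.rstrip("/")

    def _request(self, method: str, path: str,
                 body: Optional[dict] = None) -> urllib.request.Request:
        headers = {"Accept": MEDIA_TYPE}
        data = None
        if body is not None:
            data = json.dumps(body).encode("utf-8")
            headers["Content-Type"] = MEDIA_TYPE
        return urllib.request.Request(self.base_url + path, data=data,
                                      headers=headers, method=method)

    @staticmethod
    def _subject(subject: str) -> str:
        # Percent-encode so subjects containing "/" stay one path segment.
        return urllib.parse.quote(subject, safe="")

    def list_subjects(self) -> urllib.request.Request:
        return self._request("GET", "/subjects")

    def list_versions(self, subject: str) -> urllib.request.Request:
        return self._request("GET", f"/subjects/{self._subject(subject)}/versions")

    def get_version(self, subject: str,
                    version: Union[int, str] = "latest") -> urllib.request.Request:
        return self._request(
            "GET", f"/subjects/{self._subject(subject)}/versions/{version}")

    def register(self, subject: str, schema: dict) -> urllib.request.Request:
        # The API expects the Avro schema itself as a JSON-encoded string.
        return self._request("POST", f"/subjects/{self._subject(subject)}/versions",
                             {"schema": json.dumps(schema)})

    def check_compatibility(self, subject: str, schema: dict,
                            version: Union[int, str] = "latest") -> urllib.request.Request:
        path = f"/compatibility/subjects/{self._subject(subject)}/versions/{version}"
        return self._request("POST", path, {"schema": json.dumps(schema)})
```

Assuming a registry is actually listening at the base URL, you would send a request with `urllib.request.urlopen(req)` and read the JSON response. The compatibility check is the registry-side "validation" mentioned above.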

What are my options on Azure for the Schema Registry?

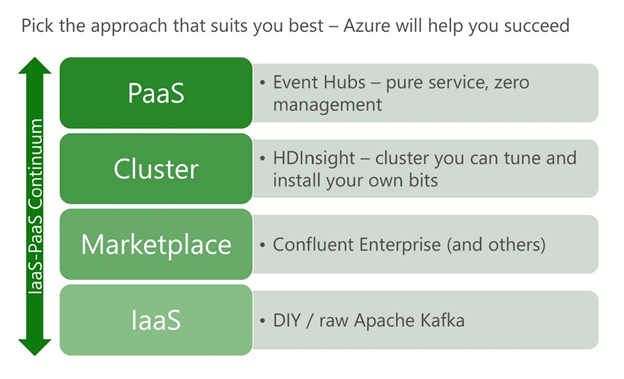

- You can install and manage your own Apache Kafka cluster (IaaS).

- You can install Confluent Enterprise from the Azure Marketplace.

- You can use HDInsight to launch a Kafka cluster with the Schema Registry. I've put together an ARM template for this; please see the GitHub repo for the HDInsight Kafka cluster with Confluent's Schema Registry.

- Currently, Event Hubs stores only the data (the events); schema metadata is not stored. To store the schema metadata, you can install a small Kafka cluster on Azure alongside the Schema Registry. Please see the following GitHub post on how to configure the Schema Registry to work with Event Hubs.

- In a future release, Event Hubs will be able to store schema metadata along with the events. At that point, a Schema Registry on a VM will suffice, with no need for a small Kafka cluster.
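
This sketch shows why Event Hubs holds only the data, assuming producers use Confluent's Avro serializer with the Schema Registry. In that case each event body carries only a 5-byte header ahead of the Avro payload: a zero "magic byte" and the 4-byte big-endian schema ID. The schema itself lives in the registry, where a consumer can fetch it by ID, for example via the registry's `GET /schemas/ids/{id}` endpoint. The function names are hypothetical.

```python
import struct

MAGIC_BYTE = 0
HEADER = struct.Struct(">bI")  # big-endian: 1-byte magic, 4-byte unsigned schema ID


def frame(schema_id: int, avro_payload: bytes) -> bytes:
    """Prefix an Avro-encoded body with the magic byte and schema ID."""
    return HEADER.pack(MAGIC_BYTE, schema_id) + avro_payload


def unframe(message: bytes) -> tuple[int, bytes]:
    """Split an event body into (schema_id, avro_payload)."""
    if len(message) < HEADER.size:
        raise ValueError("message too short for the 5-byte header")
    magic, schema_id = HEADER.unpack(message[:HEADER.size])
    if magic != MAGIC_BYTE:
        raise ValueError(f"unexpected magic byte {magic}")
    # The schema for schema_id must come from the registry, not from the event.
    return schema_id, message[HEADER.size:]
```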

Other than the Schema Registry, are there any alternative ways of doing schema validation for the events?

Yes, we can use the Capture feature of Event Hubs for schema validation.

When Capture writes messages to Azure Blob Storage or Azure Data Lake Store, it can trigger an Azure Function through a Capture event. The Function can then run custom validation of the received messages' schema using the Avro tools/libraries.
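
This is a sketch of the Function's first step. It assumes the Capture event arrives as an Event Grid event of type `Microsoft.EventHub.CaptureFileCreated` whose `data` carries `fileUrl` and `eventCount`; verify these against the Event Grid schema for Event Hubs. The Azure Functions trigger binding and the download of the Avro file are deliberately left out. Each Capture record keeps the original event payload in its `Body` field. The second helper assumes the records are the dicts an Avro reader (for example fastavro's `reader`) yields, plus a hypothetical `validate` callback for your own payload check.

```python
from typing import Callable, Iterable, Optional

CAPTURE_EVENT_TYPE = "Microsoft.EventHub.CaptureFileCreated"


def capture_file_to_validate(event: dict) -> Optional[str]:
    """Return the URL of a newly captured Avro file, or None if nothing to check."""
    if event.get("eventType") != CAPTURE_EVENT_TYPE:
        return None
    data = event.get("data", {})
    if data.get("eventCount", 0) == 0:  # empty capture window
        return None
    return data.get("fileUrl")


def invalid_sequence_numbers(records: Iterable[dict],
                             validate: Callable[[bytes], bool]) -> list:
    """List SequenceNumbers of captured events whose Body fails validation."""
    return [rec["SequenceNumber"] for rec in records if not validate(rec["Body"])]
```

Reporting sequence numbers, rather than dropping records, leaves it to you whether invalid events get alerted on, quarantined, or replayed.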

Please see the following on capturing events through Azure Event Hubs into Azure Blob Storage or Azure Data Lake Storage, and on using Event Grid and Azure Functions to migrate Event Hubs data into a data warehouse.

We can also write Spark jobs that consume events from Event Hubs and validate the Avro messages with custom schema validation code, using Java packages such as org.apache.avro.* and kafka.serializer.*. Please look at this tutorial on how to stream data into Azure Databricks using Event Hubs.
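
The per-record check such a job would run (for instance inside a `filter` or `foreachBatch`) can be sketched in plain Python. The sketch does not use Spark or the Avro library; it swaps in a simplified checker in place of Avro's own validation (such as `GenericData.validate` in Java). It supports primitive types and unions, requires every schema field to be present, and ignores extra keys. Those rules, the function names, and the example schema are assumptions for illustration.

```python
INT32 = (-2**31, 2**31 - 1)
INT64 = (-2**63, 2**63 - 1)


def matches(value, avro_type) -> bool:
    """Check one decoded value against an Avro primitive type or union."""
    if isinstance(avro_type, list):  # union: any branch may match
        return any(matches(value, branch) for branch in avro_type)
    if avro_type == "null":
        return value is None
    if avro_type == "boolean":
        return isinstance(value, bool)
    if avro_type in ("int", "long"):
        lo, hi = INT32 if avro_type == "int" else INT64
        # bool is a subclass of int in Python, so exclude it explicitly.
        return isinstance(value, int) and not isinstance(value, bool) and lo <= value <= hi
    if avro_type in ("float", "double"):
        return isinstance(value, (int, float)) and not isinstance(value, bool)
    if avro_type == "string":
        return isinstance(value, str)
    if avro_type == "bytes":
        return isinstance(value, bytes)
    raise NotImplementedError(f"type {avro_type!r} is outside this sketch")


def is_valid(record: dict, schema: dict) -> bool:
    """Every schema field must be present and match its declared type."""
    return all(field["name"] in record and matches(record[field["name"]], field["type"])
               for field in schema["fields"])


def split_batch(records, schema):
    """Partition a micro-batch into (valid, invalid) lists."""
    valid, invalid = [], []
    for record in records:
        (valid if is_valid(record, schema) else invalid).append(record)
    return valid, invalid
```

Invalid records can then go to a dead-letter location instead of failing the whole batch.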

Conclusion

Microsoft Azure is a comprehensive cloud computing service that gives you both the control of IaaS and the higher-level services of PaaS.

After assessing the project, if schema validation is required, you can use the Event Hubs PaaS service with a single Schema Registry VM instance, or leverage the Event Hubs Capture feature for schema validation.

Source: Azure Blog Feed