Introducing incremental enrichment in Azure Cognitive Search

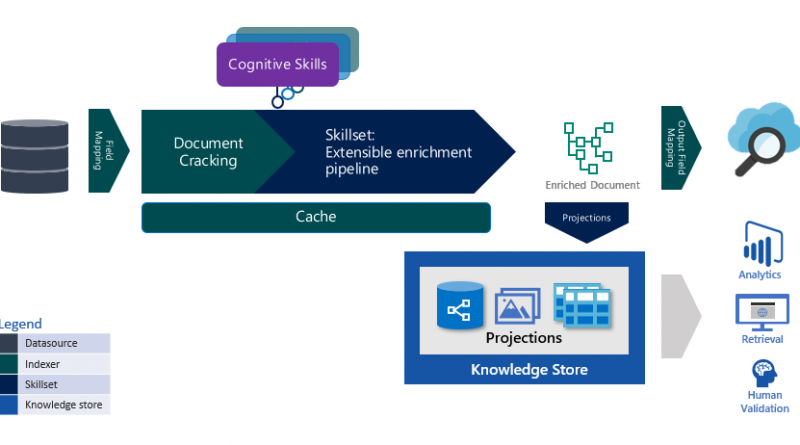

Incremental enrichment is a new feature of Azure Cognitive Search that brings a declarative approach to indexing your data. When incremental enrichment is turned on, document enrichment is performed at the least cost, even as your skills continue to evolve. Indexers in Azure Cognitive Search add documents to your search index from a data source. Indexers track updates to the documents in your data sources and update the index with the new or updated documents from the data source.

Incremental enrichment extends change tracking from document changes in the data source to all aspects of the enrichment pipeline. With incremental enrichment, the indexer will drive your documents to eventual consistency with your data source, the current version of your skillset, and the indexer.

Indexers have a few key characteristics:

- Data source specific.

- State aware.

- Can be configured to drive eventual consistency between your data source and index.

In the past, editing your skillset by adding, deleting, or updating skills left you with a sub-optimal choice: either rerun all the skills on the entire corpus, essentially a reset on your indexer, or tolerate version drift, where documents in your index are enriched with different versions of your skillset.

With the latest update to the preview release of the API, the indexer state management is being expanded from only the data source and indexer field mappings to also include the skillset, output field mappings, knowledge store, and projections.

Incremental enrichment vastly improves the efficiency of your enrichment pipeline. It eliminates the choice between accepting the potentially large cost of re-enriching the entire corpus of documents when a skill is added or updated, and dealing with version drift, where documents created or updated with different versions of the skillset differ widely in the shape and quality of their enrichments.

Indexers now track and respond to changes across your enrichment pipeline by determining which skills have changed and, when invoked, selectively executing only the updated skills and any downstream or dependent skills. By configuring incremental enrichment, you can ensure that all documents in your index are always processed with the most current version of your enrichment pipeline, while performing the least amount of work required. Incremental enrichment also gives you granular controls for scenarios where you want to determine exactly how a change is handled.

Indexer cache

Incremental enrichment is made possible by the addition of an indexer cache to the enrichment pipeline. The indexer caches the results from each skill for every document. When a data source needs to be re-indexed due to a skillset update (a new or updated skill), each of the previously enriched documents is read from the cache, and only the affected skills (those that changed and those downstream of the changes) are re-run. The updated results are written to the cache, and the document is updated in the index and, optionally, the knowledge store. Physically, the cache is a storage account. All indexes within a search service may share the same storage account for the indexer cache. Each indexer is assigned a unique cache id that is immutable.
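The caching behavior described above can be illustrated with a small, self-contained simulation. This is only a sketch of the idea, not the service's implementation: the skill names, the version numbers used to detect changes, and the in-memory dictionary standing in for the storage account are all hypothetical.

```python
from typing import Callable, Dict, List, Set, Tuple

# Hypothetical model: a skill is (version, input names, function).
Skill = Tuple[int, List[str], Callable[..., str]]


def affected_skills(old: Dict[str, Skill], new: Dict[str, Skill]) -> Set[str]:
    """Return skills that are new or changed, plus everything downstream."""
    dirty = {
        name for name, (version, _, _) in new.items()
        if name not in old or old[name][0] != version
    }
    changed = True
    while changed:  # propagate until no more downstream skills are found
        changed = False
        for name, (_, inputs, _) in new.items():
            if name not in dirty and any(i in dirty for i in inputs):
                dirty.add(name)
                changed = True
    return dirty


def enrich(doc_id: str, content: str, skills: Dict[str, Skill],
           cache: Dict[Tuple[str, str], str], dirty: Set[str]):
    """Run skills in order, reusing cached outputs for unaffected skills.

    Assumes `skills` is listed in dependency order.
    """
    outputs = {"content": content}
    executed = []
    for name, (_, inputs, fn) in skills.items():
        key = (doc_id, name)
        if name in dirty or key not in cache:
            cache[key] = fn(*(outputs[i] for i in inputs))
            executed.append(name)
        outputs[name] = cache[key]
    return outputs, executed


skills_v1: Dict[str, Skill] = {
    "ocr": (1, ["content"], lambda c: c.lower()),
    "entities": (1, ["ocr"], lambda t: ",".join(sorted(set(t.split())))),
    "language": (1, ["content"], lambda c: "en"),
}
cache: Dict[Tuple[str, str], str] = {}

# First run: the cache is empty, so every skill executes.
_, first_run = enrich("doc1", "Hello World hello", skills_v1, cache, set())

# Update only the "ocr" skill; "entities" depends on it, "language" does not.
skills_v2 = dict(skills_v1)
skills_v2["ocr"] = (2, ["content"], lambda c: c.lower().strip())
dirty = affected_skills(skills_v1, skills_v2)
_, second_run = enrich("doc1", "Hello World hello", skills_v2, cache, dirty)

print(first_run)   # ['ocr', 'entities', 'language']
print(second_run)  # ['ocr', 'entities']
```

On the second run the "language" result comes straight from the cache, which is the saving incremental enrichment is designed to deliver.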

Granular controls over indexing

Incremental enrichment provides a host of granular controls from ensuring the indexer is performing the highest priority task first to overriding the change detection.

- Change detection override: Incremental enrichment gives you granular control over all aspects of the enrichment pipeline. This allows you to deal with situations where a change might have unintended consequences. For example, editing a skillset and updating the URL for a custom skill will result in the indexer invalidating the cached results for that skill. If you are only moving the endpoint to a different virtual machine (VM) or redeploying your skill with a new access key, you really don’t want any existing documents reprocessed.

To ensure that the indexer only performs enrichments you explicitly require, updates to the skillset can optionally set the disableCacheReprocessingChangeDetection query string parameter to true. When set, this parameter ensures that only the update to the skillset is committed and the change is not evaluated for effects on the existing corpus.
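As a rough illustration, the following sketch builds the URL for a skillset update that carries this parameter. The service name, skillset name, and API version string are placeholders chosen for the example (assumptions, not values from this article), and no request is actually sent.

```python
from urllib.parse import urlencode

# Assumption: a preview API version string; substitute the one you are using.
API_VERSION = "2019-05-06-Preview"


def skillset_update_url(service: str, skillset: str,
                        disable_change_detection: bool = False) -> str:
    """Build the URL for a PUT that updates a skillset definition."""
    params = {"api-version": API_VERSION}
    if disable_change_detection:
        # Commit the skillset edit without evaluating its effect on
        # documents that are already in the cache.
        params["disableCacheReprocessingChangeDetection"] = "true"
    query = urlencode(params)
    return f"https://{service}.search.windows.net/skillsets/{skillset}?{query}"


# "my-service" and "my-skillset" are hypothetical names.
url = skillset_update_url("my-service", "my-skillset",
                          disable_change_detection=True)
print(url)
```

Leaving the flag off (the default here) lets the indexer evaluate the change and reprocess affected documents as usual.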

- Cache invalidation: The converse of that scenario is one where you deploy a new version of a custom skill and nothing within the enrichment pipeline changes, but you need a specific skill invalidated and all affected documents re-processed to reflect the benefits of an updated model. In these instances, you can call the reset skills operation on the skillset. The reset skills API accepts a POST request with the list of skill outputs in the cache that should be invalidated. For more information on the reset skills API, see the documentation.
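A reset skills call might be prepared along the following lines. Treat this strictly as a sketch: the `resetskills` path segment, the `skillNames` body field, the API version, and all names and keys are assumptions made for illustration and should be checked against the reset skills documentation. The request object is only constructed here, never sent.

```python
import json
from urllib.parse import urlencode
from urllib.request import Request

# Assumption: a preview API version string; substitute the one you are using.
API_VERSION = "2019-05-06-Preview"


def build_reset_skills_request(service: str, skillset: str, api_key: str,
                               skill_names: list) -> Request:
    """Prepare (but do not send) a POST that invalidates cached skill outputs."""
    query = urlencode({"api-version": API_VERSION})
    # Assumed endpoint shape; verify against the API reference.
    url = (f"https://{service}.search.windows.net"
           f"/skillsets/{skillset}/resetskills?{query}")
    # Assumed body shape: the skills whose cached outputs should be reset.
    body = json.dumps({"skillNames": list(skill_names)}).encode("utf-8")
    headers = {"Content-Type": "application/json", "api-key": api_key}
    return Request(url, data=body, headers=headers, method="POST")


# Hypothetical service, skillset, key, and skill names.
request = build_reset_skills_request(
    "my-service", "my-skillset", "<admin-key>", ["#1", "#2"]
)
print(request.get_method(), request.full_url)
print(request.data.decode("utf-8"))
```

Sending the prepared request with `urllib.request.urlopen(request)` would then ask the service to reprocess the documents affected by those skills on the next indexer run.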

Updates to existing APIs

Introducing incremental enrichment will result in an update to some existing APIs.

Indexers

Indexers will now expose a new property:

Cache

- StorageAccountConnectionString: The connection string to the storage account that will be used to cache the intermediate results.

- CacheId: The identifier of the container within the annotationCache storage account that is used as the cache for this indexer. This cache is unique to this indexer, and if the indexer is deleted and recreated with the same name, the cacheId will be regenerated. The cacheId cannot be set; it is always generated by the service.

- EnableReprocessing: Set to true by default. When set to false, documents will continue to be written to the cache, but no existing documents will be reprocessed based on the cache data.
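To make the shape of this property concrete, here is a sketch of an indexer definition that includes a cache. The JSON key spellings (`storageConnectionString`, `enableReprocessing`) are an assumption about how the properties listed above appear in the REST payload, and the indexer, data source, index, and skillset names are hypothetical. The cacheId is deliberately left out because the service generates it.

```python
import json


def build_indexer_definition(name: str, data_source: str, index: str,
                             skillset: str, storage_connection_string: str,
                             enable_reprocessing: bool = True) -> dict:
    """Return an indexer definition (as a dict) with a cache configured."""
    return {
        "name": name,
        "dataSourceName": data_source,
        "targetIndexName": index,
        "skillsetName": skillset,
        "cache": {
            # Assumed key names; confirm them in the indexer API reference.
            "storageConnectionString": storage_connection_string,
            "enableReprocessing": enable_reprocessing,
            # No cacheId here: it is always generated by the service.
        },
    }


# All names and the connection string are placeholders.
definition = build_indexer_definition(
    "my-indexer", "my-datasource", "my-index", "my-skillset",
    "<storage-account-connection-string>",
)
print(json.dumps(definition, indent=2))
```

Passing `enable_reprocessing=False` models the case where documents keep being written to the cache but existing documents are not reprocessed.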

Indexers will also support a new querystring parameter:

ignoreResetRequirement set to true allows the commit to go through, without triggering a reset condition.

Skillsets

Skillsets will not support any new operations, but will support a new querystring parameter:

disableCacheReprocessingChangeDetection set to true when you want no updates to existing documents based on the current action.

Datasources

Datasources will not support any new operations, but will support a new querystring parameter:

ignoreResetRequirement set to true allows the commit to go through without triggering a reset condition.
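The ignoreResetRequirement parameter applies to updates of both indexers and datasources, so a single helper can cover the two cases. The sketch below only builds the update URL; the service and resource names and the API version string are placeholders assumed for the example.

```python
from urllib.parse import urlencode

# Assumption: a preview API version string; substitute the one you are using.
API_VERSION = "2019-05-06-Preview"


def resource_update_url(service: str, collection: str, name: str,
                        ignore_reset_requirement: bool = False) -> str:
    """Build the update URL for an indexer or a data source."""
    if collection not in ("indexers", "datasources"):
        raise ValueError("collection must be 'indexers' or 'datasources'")
    params = {"api-version": API_VERSION}
    if ignore_reset_requirement:
        # Let the commit go through without triggering a reset condition.
        params["ignoreResetRequirement"] = "true"
    query = urlencode(params)
    return f"https://{service}.search.windows.net/{collection}/{name}?{query}"


# Hypothetical resource names.
indexer_url = resource_update_url("my-service", "indexers", "my-indexer",
                                  ignore_reset_requirement=True)
datasource_url = resource_update_url("my-service", "datasources",
                                     "my-datasource")
print(indexer_url)
print(datasource_url)
```

The flag defaults to off in this helper so that skipping the reset requirement is always an explicit decision.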

Best practices

The recommended approach to using incremental enrichment is to configure the cache property on a new indexer, or to reset an existing indexer and set the cache property. Use ignoreResetRequirement sparingly, as it could lead to unintended inconsistency in your data that will not be detected easily.

Takeaways

Incremental enrichment is a powerful feature that allows you to declaratively ensure that the data from your datasource is always consistent with the data in your search index or knowledge store. As your skills, skillsets, or enrichments evolve, the enrichment pipeline will ensure the least possible work is performed to drive your documents to eventual consistency.

Next steps

Get started with incremental enrichment by adding a cache to an existing indexer or by adding the cache when defining a new indexer.

Source: Azure Blog Feed