Scalable management of virtualized RAN with Kubernetes

Among the many important reasons why telecommunication companies should be attracted to Microsoft Azure are our network and system management tools. Azure has invested many intellectual and engineering cycles in the development of a sophisticated, robust framework that manages millions of servers and several hundred thousand network elements distributed in over one hundred and forty countries around the world. We have built tools and expertise to maintain these systems, use AI to predict problem areas and solve them before they become issues, and provide transparency in the performance and efficiency of a very large and complicated system.

At Microsoft, we believe these tools and expertise can be repurposed to manage and optimize telecommunication infrastructure as well. This is because the evolving infrastructure for telecommunication operators includes elements of edge and cloud computing that lend themselves well to global management. In this article, I will describe some of the more interesting technologies that fit into the management of a cloud-based telecommunications infrastructure.

Up and running in just a few clicks

If you want to set up a 5G cellular site, there are a few key requirements. After gathering and interconnecting your hardware (servers, network switches, cables, power supplies, and other components), you plug your edge server machines into power and networking outlets. Each machine will be accessible via a standards-based baseboard management controller (BMC), which usually runs a lightweight operating system (Linux, for example) so that the machine can be managed remotely over the network.

When powered up, the BMC will obtain an IP address, most likely from a networked DHCP server. Next, an Azure VPN Gateway will be instantiated—this is a Microsoft Azure-managed service that is deployed into an Azure Virtual Network (VNet), and provides the endpoint for VPN connectivity for point-to-site VPNs, site-to-site VPNs, and Azure ExpressRoute. This gateway is the connection point into Azure from either the on-premises network (site-to-site) or the client machine (point-to-site). Using private VNet peering allows Azure to talk to the BMC on each machine.

Once this is working, the network operator can enable scripts that run automatically and talk to the BMC via Azure to install the basic input/output system (BIOS) and the proper operating system (OS) images on the machine. Once these edge machines have an OS, a Kubernetes (K8s) cluster encompassing multiple machines can be created by using tools such as kubeadm. The K8s cluster is connected to Microsoft Azure Arc so that workloads can be scheduled onto the cluster using Azure APIs.
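The post does not name the BMC protocol or show these scripts. As a minimal sketch, assuming the BMC exposes the DMTF Redfish REST API, a provisioning script might ask a machine to network-boot an installer once and then restart. The BMC address and the system ID `1` are placeholders, authentication is omitted, and the requests are only built here, not sent.

```python
import json
import urllib.request


def redfish_request(bmc_host, path, payload, method):
    """Build (but do not send) a JSON request addressed to a BMC."""
    return urllib.request.Request(
        url=f"https://{bmc_host}{path}",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method=method,
    )


def pxe_reinstall_requests(bmc_host, system_id="1"):
    """Return the two requests for a one-time PXE boot followed by a restart.

    system_id is an assumption: real BMCs use vendor-specific system IDs.
    """
    system = f"/redfish/v1/Systems/{system_id}"
    # Step 1: override the boot source for the next boot only.
    set_boot = redfish_request(
        bmc_host,
        system,
        {"Boot": {"BootSourceOverrideEnabled": "Once",
                  "BootSourceOverrideTarget": "Pxe"}},
        "PATCH",
    )
    # Step 2: restart the machine so that the override takes effect.
    reset = redfish_request(
        bmc_host,
        f"{system}/Actions/ComputerSystem.Reset",
        {"ResetType": "ForceRestart"},
        "POST",
    )
    return [set_boot, reset]


if __name__ == "__main__":
    for req in pxe_reinstall_requests("10.0.0.5"):
        print(req.get_method(), req.full_url, req.data.decode("utf-8"))
```

In a real deployment these requests would travel over the VPN path described above and carry credentials; both are left out to keep the sketch small.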

Management via Azure Arc

Microsoft Azure Arc is a set of technologies that extend Azure management to any infrastructure, enabling the deployment of Azure data services anywhere. Specifically, Azure management can be extended to Linux and Windows physical and virtual servers, and to K8s clusters so Azure data services can run on any K8s infrastructure. In this way, Azure Arc provides a unified management experience across the entire telecommunications infrastructure estate, whether it’s on-premises, in a public cloud, or in multiple public clouds.

This gives the operator a single-pane view and an automation control plane across its heterogeneous environments, as well as the ability to govern and manage all these resources in a consistent way. The Microsoft Azure portal, role-based access control, resource groups, search, and services like Azure Monitor and Microsoft Sentinel are also enabled. Security for next-generation networks, like the ones telecommunications operators are lighting up, is a topic I recently wrote about.

For developers, this unified framework delivers the freedom to use the tools they are familiar with while focusing more on the business logic in their applications. Microsoft Azure Arc, along with other existing and new Microsoft technologies and services, forms the basis of our Azure Operator Distributed Services, which will bring a carrier-grade hybrid cloud service to the market.

However, running radio access network (RAN) functions on a vanilla Arc-connected Kubernetes cluster is difficult. It requires manual and vendor-specific tuning, resource management, and monitoring capabilities, making it difficult to deploy across servers with different specs and to scale as more virtual RAN (vRAN) deployments come up. Therefore, in addition to Microsoft Azure Arc and Azure Operator Distributed Services, we have developed the Kubernetes for Operator RAN (KfOR) framework, which provides extensions that are installed on top of vanilla K8s clusters to specifically enhance the deployment, management, and monitoring of RAN workloads on the cluster. These are the essential components necessary for lighting up the automatic management and self-healing properties of next-generation telecommunication cloud networks, creating an edge platform that turns the vRAN into yet another cloud-managed application.

Kubernetes for Operator RAN (KfOR) extensions for virtualized RAN

To optimally utilize edge server resources and provide reliability, telecommunication RAN network functions (NFs) typically run in containers within a server cluster, utilizing K8s for container orchestration. Although Kubernetes allows us to take advantage of a rich ecosystem of components, there are several challenges related to running RAN NFs that are high-performance, latency-sensitive, and bound by demanding service-level agreements in edge datacenters.

For example, RAN NFs run close to the cell tower in the far-edge, which in many cases is owned by the telecommunications operator. The high availability, high performance, and low latency that vRAN requires necessitate the use of single root I/O virtualization (SR-IOV) working with the Data Plane Development Kit (DPDK), programmable switches, accelerators, and custom workload lifecycle controllers. This is well beyond what standard K8s offers.

To address these challenges, we have developed KfOR, which fills this gap and enables an end-to-end deployment, RAN management, monitoring, and analytics experience through Azure.

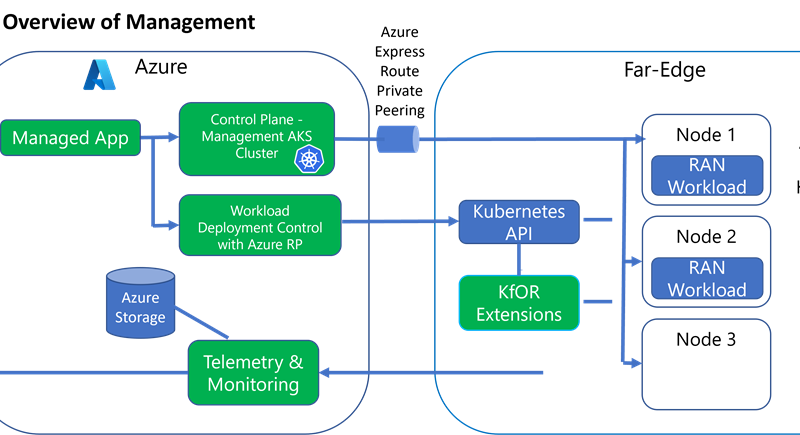

The figure shows how the various components of Azure and Kubernetes (blue) and those developed by the Azure for Operators team (green) fit together. Specifically, it shows the use of an Azure Resource Provider (RP) and an Azure Managed App, which allows the spin-up of a Management Azure Kubernetes Service (AKS) cluster on Azure. This control-plane management cluster can then utilize open source and in-house developed components to deploy and manage the edge cluster (the Azure Arc–enabled Kubernetes workload cluster).

The control plane manages both the provisioning of the bare-metal nodes on the workload cluster and the Kubernetes components running on these nodes. Within the workload cluster, KfOR provides custom Kubernetes extensions to simplify the development, deployment, management, and monitoring of multi-vendor NFs. KfOR utilizes extension points available in Kubernetes such as custom controllers, DaemonSets, mutating webhooks, and custom runtime hooks. Here are some examples of its capabilities:

- Container suspension capability. KfOR can create pods whose containers start in a suspended state and are activated automatically later. This capability can be used to create "warm standbys": pods that can immediately replace active pods that fail, reducing downtime from several seconds to under one second. The same feature can also ensure that pods launch in a predetermined order by specifying pod dependencies, which matters because some vRAN pods require another pod to have reached a particular state before they launch.

- Advanced Kubernetes networking stack. KfOR provides an advanced networking library using DPDK and a method to auto-inject this library into any pod using a sidecar container. KfOR also provides a mechanism to autoload this library ahead of the standard sockets library. This allows for code written using standard User Datagram Protocol sockets to achieve microsecond latency using DPDK underneath, without modifying a single line of code.

- Cloud-native user-space eBPF codelets. Extended Berkeley Packet Filter (eBPF) is used to extend the capabilities of the kernel safely and efficiently without changing the kernel source code or loading kernel modules. KfOR provides a mechanism to submit user-space eBPF codelets to the K8s cluster, as well as a method for inserting these codelets by using K8s pod annotations. The codelets attach dynamically to hook points in running code in the network functions and can be used for monitoring and analytics.

- Advanced scheduling and management of cluster resources. KfOR provides a K8s device plugin that allows for the scheduling and usage of isolated CPU cores as a resource separate from standard CPU cores. This enables RAN workloads to run on a K8s cluster with no manual configuration, such as pinning threads to predefined cores. KfOR also provides a custom runtime hook to isolate resources so containers cannot use CPUs, network interface controllers, or accelerators that have not been assigned to them.
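The post does not say how the networking sidecar is injected. One common Kubernetes pattern, and one of the extension points listed above, is a mutating admission webhook. The sketch below shows only the core of such a webhook: it takes an `AdmissionReview` request for a pod and answers with a JSON Patch that appends a sidecar container. The sidecar name and image are hypothetical, and the HTTPS server and TLS setup that a real webhook needs are left out.

```python
import base64
import json

# Hypothetical sidecar definition; not a real KfOR image.
SIDECAR = {
    "name": "dpdk-net-sidecar",
    "image": "registry.example/dpdk-net-sidecar:0.1",
}


def mutate(review):
    """Return an AdmissionReview response that injects SIDECAR if it is missing."""
    request = review["request"]
    pod = request["object"]
    existing = {c["name"] for c in pod["spec"].get("containers", [])}

    # The response must echo the request uid and state whether the pod is allowed.
    response = {"uid": request["uid"], "allowed": True}

    if SIDECAR["name"] not in existing:
        # "/spec/containers/-" appends to the end of the containers array.
        patch = [{"op": "add", "path": "/spec/containers/-", "value": SIDECAR}]
        response["patchType"] = "JSONPatch"
        # The API server expects the patch as base64-encoded JSON.
        response["patch"] = base64.b64encode(
            json.dumps(patch).encode("utf-8")
        ).decode("ascii")

    return {
        "apiVersion": "admission.k8s.io/v1",
        "kind": "AdmissionReview",
        "response": response,
    }


if __name__ == "__main__":
    review = {
        "apiVersion": "admission.k8s.io/v1",
        "kind": "AdmissionReview",
        "request": {
            "uid": "example-uid",
            "object": {"spec": {"containers": [{"name": "du", "image": "du:1"}]}},
        },
    }
    print(json.dumps(mutate(review), indent=2))
```

Because the patch is applied by the API server before the pod is stored, the application's own manifest and code stay unchanged, which matches the "without modifying a single line of code" goal described in the networking item.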

With these capabilities, we have accomplished one-click deployment of RAN workloads as well as real-time workload migration and defragmentation. As a result, KfOR is able to shut off unused nodes to save energy. KfOR is also able to properly configure programmable switches that are used to route traffic from one server to the next. Furthermore, with KfOR, we can deliver fine-grained RAN analytics, which will be discussed in a future blog post.
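The post does not describe how KfOR decides where to migrate workloads. To illustrate why defragmentation can free whole nodes for shutdown, here is a toy first-fit-decreasing packing. It is a stand-in heuristic, not KfOR's algorithm, and the pod names, core counts, and node sizes are made up.

```python
def consolidate(pod_cores, node_capacity, node_count):
    """Pack pods onto as few nodes as first-fit-decreasing manages.

    pod_cores maps a pod name to the number of cores it needs.
    Returns one list of pod names per node, in node order.
    """
    free = [node_capacity] * node_count
    placement = [[] for _ in range(node_count)]
    # Place the largest pods first; ties are broken by name for determinism.
    for name, cores in sorted(pod_cores.items(), key=lambda kv: (-kv[1], kv[0])):
        for node in range(node_count):
            if cores <= free[node]:
                free[node] -= cores
                placement[node].append(name)
                break
        else:
            raise ValueError(f"no node has {cores} free cores for {name}")
    return placement


def idle_nodes(placement):
    """Nodes with no pods are candidates for powering off."""
    return [node for node, pods in enumerate(placement) if not pods]


if __name__ == "__main__":
    pods = {"du-a": 6, "du-b": 5, "cu-a": 3, "cu-b": 2}
    plan = consolidate(pods, node_capacity=8, node_count=4)
    print(plan)
    print("idle nodes:", idle_nodes(plan))
```

With these made-up numbers, four pods that might have been spread one per node fit on two nodes, leaving the other two idle. A real system would also have to account for migration cost, accelerators, and latency constraints, which this sketch ignores.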

KfOR goes beyond simple automation. It turns the far-edge into a true platform that treats the vRAN as yet another app that you can install, uninstall, and swap with a click of a button. It provides APIs and abstractions that allow vRAN vendors to fine-tune their functions for real-time performance without needing to know the details of the bare metal. This is in contrast to existing vRAN solutions that, even though virtualized, still treat the vRAN as an appliance that needs to be manually tuned and is not easily portable across servers with even slightly different configurations.

Deployment of KfOR extensions is completed by using the management cluster to launch the add-ons on the workload cluster. KfOR capabilities can be used by any K8s deployment by simply adding annotations to the workload manifest.
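The post does not publish the annotation names. As a sketch of what "adding annotations to the workload manifest" could look like, the helper below adds annotations to the pod template of a Deployment manifest held as a Python dictionary. The `kfor.example/...` keys are invented for illustration and are not real KfOR annotations.

```python
import copy
import json


def with_annotations(deployment, annotations):
    """Return a copy of a Deployment manifest with pod-template annotations added."""
    patched = copy.deepcopy(deployment)  # leave the caller's manifest untouched
    template_meta = patched["spec"]["template"].setdefault("metadata", {})
    template_meta.setdefault("annotations", {}).update(annotations)
    return patched


if __name__ == "__main__":
    deployment = {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": "vran-du"},
        "spec": {
            "selector": {"matchLabels": {"app": "vran-du"}},
            "template": {
                "metadata": {"labels": {"app": "vran-du"}},
                "spec": {"containers": [{"name": "du", "image": "du:1"}]},
            },
        },
    }
    # Hypothetical annotation keys, used only to show the shape of the change.
    opted_in = with_annotations(
        deployment,
        {
            "kfor.example/warm-standby": "true",
            "kfor.example/inject-dpdk-sidecar": "true",
        },
    )
    # Kubernetes accepts manifests in JSON as well as YAML.
    print(json.dumps(opted_in, indent=2))
```

The annotations go on the pod template rather than on the Deployment itself so that they end up on every pod the Deployment creates, which is where per-pod extensions would normally look for them.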

Robust stress-free RAN management

What I have described here is how the full power of preexisting cloud management tools, along with the new KfOR technology, can be put together to manage, monitor, automate, and orchestrate the near-edge and far-edge machines and software deployed within the emerging telecommunications infrastructure. Once the hardware and network are available, these capabilities can light up a cell site quickly and without requiring deep expertise. KfOR, developed specifically for virtual RAN management, has significant built-in value for our customers. It enables Azure to plug in artificial intelligence for sophisticated automation along with the tried-and-true technologies needed for self-managing and self-healing networks. Overall, it differentiates our offering in the telecommunications and enterprise markets.

Learn more

- Follow us for additional developments in this space and more.

- Learn more about Microsoft Azure Arc and Azure Kubernetes Service (AKS).

- Sign up for Microsoft Azure today.

Source: Azure Blog Feed