Custom Speech: Code-free automated machine learning for speech recognition

Voice is the new interface driving ambient computing, and this has never been more true than it is today. Speech recognition is transforming our daily lives, from digital assistants and dictation of emails and documents to transcription of lectures and meetings. These scenarios are possible thanks to years of research in speech recognition and to technological leaps enabled by neural networks. Microsoft is at the forefront of speech recognition research, having reached human parity on the Switchboard research benchmark.

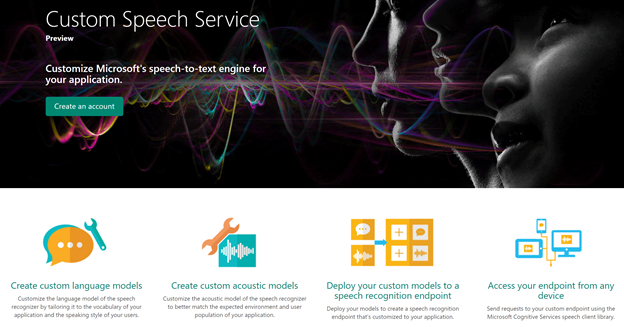

Our goal is to empower developers with our AI advances, so they can build new and transformative experiences for their customers. We offer a spectrum of APIs to address the various scenarios and situations developers encounter. The Cognitive Services Speech API gives developers access to state-of-the-art speech models. For premium scenarios that involve domain-specific vocabulary or complex acoustic conditions, we offer Custom Speech Service, which enables developers to automatically tune speech recognition models to their specific needs. We have previewed these services with customers across a wide range of scenarios.

Speech recognition systems are composed of several components, the most important of which are the acoustic model and the language model. If your application contains vocabulary items that occur rarely in everyday language, customizing the language model will significantly improve recognition accuracy. Customers can upload text data, such as words and sentences from the target domain, to build language models that can then be deployed and accessed from any device through our Speech API.
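To give a rough intuition for why in-domain text helps, here is a toy sketch in Python. It is not how Custom Speech Service builds its models: the add-one smoothed unigram model, the `train_unigram` helper, and the sample sentences are all assumptions made for illustration, and production language models are far richer. The sketch only shows that adding domain sentences raises the probability the model assigns to a rare term.

```python
from collections import Counter


def train_unigram(sentences, vocabulary):
    """Return add-one smoothed unigram probabilities over a fixed vocabulary.

    Hypothetical helper for illustration only; real language models
    condition on context and are not simple word-frequency tables.
    """
    counts = Counter(word for s in sentences for word in s.lower().split())
    # Every vocabulary word gets one pseudo-count so unseen words keep
    # a small, non-zero probability.
    total = sum(counts[w] for w in vocabulary) + len(vocabulary)
    return {w: (counts[w] + 1) / total for w in vocabulary}


# Made-up sample data: everyday text versus text from the target domain.
general_text = [
    "the weather is nice today",
    "please send the email today",
]
domain_text = [
    "nerodia erythrogaster is a water snake",
    "the nerodia genus lives near water",
]

vocabulary = {w for s in general_text + domain_text for w in s.lower().split()}

baseline = train_unigram(general_text, vocabulary)
adapted = train_unigram(general_text + domain_text, vocabulary)

print(f"baseline P(nerodia) = {baseline['nerodia']:.3f}")
print(f"adapted  P(nerodia) = {adapted['nerodia']:.3f}")
```

In this toy setup the rare term roughly doubles in probability once the domain sentences are included, which is the kind of shift that makes a recognizer more willing to output it.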

University lectures are a typical example, as they contain domain-specific terminology. In a biology lecture, for example, we could encounter terms like "Nerodia erythrogaster", which are extremely specific but important to transcribe correctly. Presentation Translator, a PowerPoint add-in that customizes the language model based on slide content, addresses lectures and other presentation scenarios by offering highly accurate transcription of domain-specific audio. This video on getting started with Presentation Translator provides a deeper explanation, and this video demonstrates the Presentation Translator add-in.

Similarly, customizing the acoustic model enables the speech recognition system to be accurate in particular environments. For example, if a voice-enabled app is intended for use in a warehouse or factory, a custom acoustic model can accurately recognize speech in the presence of loud or persistent background noise. Customers can upload audio data with accompanying transcripts to build acoustic models.

Custom Speech Service enables acoustic and language model adaptation with zero coding. Our user interface guides you through data import, model adaptation, evaluation, and optimization by measuring word error rate and tracking improvements. It also guides you through model deployment at scale, so your application can access the models from any device.
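Word error rate (WER) is conventionally defined as the number of word substitutions, deletions, and insertions needed to turn the recognized text into the reference transcript, divided by the number of words in the reference. The service computes this for you, but if you want to sanity-check results offline, a minimal sketch might look like the following. The `word_error_rate` function, its lowercase-and-split normalization, and the sample transcripts are assumptions for illustration, not the service's own implementation.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """Compute WER as word-level edit distance divided by reference length.

    Illustrative helper: normalization here is just lowercasing and
    whitespace splitting, which is simpler than what real evaluation
    tools typically apply.
    """
    ref = reference.lower().split()
    hyp = hypothesis.lower().split()
    if not ref:
        raise ValueError("reference transcript must contain at least one word")

    # prev[j] holds the edit distance between the reference words seen
    # so far (excluding the current one) and the first j hypothesis words.
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i] + [0] * len(hyp)
        for j, hyp_word in enumerate(hyp, start=1):
            substitution = prev[j - 1] + (ref_word != hyp_word)
            deletion = prev[j] + 1       # reference word missing from hypothesis
            insertion = curr[j - 1] + 1  # extra word in hypothesis
            curr[j] = min(substitution, deletion, insertion)
        prev = curr

    return prev[-1] / len(ref)


# Made-up transcripts: a baseline model splitting a rare term, and an
# adapted model that gets it right.
reference = "the snake nerodia erythrogaster lives near water"
baseline_output = "the snake nero dia erythro gaster lives near water"
adapted_output = "the snake nerodia erythrogaster lives near water"

print(f"baseline WER: {word_error_rate(reference, baseline_output):.3f}")
print(f"adapted WER:  {word_error_rate(reference, adapted_output):.3f}")
```

Comparing the two numbers across model versions is one simple way to track whether an adaptation step actually improved recognition on your own test set.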

Creating, updating, and deploying models takes only minutes, making it easy to build and iteratively improve your application. To learn more and start building your own speech recognition models, visit Custom Speech Service and our Custom Speech Service documentation pages.

Source: Azure Blog Feed